This article follows part 1.

AGI is defined as human-level general intelligence, while ASI would be a superintelligence whose reasoning capabilities would be far superior to those of humans. But let’s return to our story, which, as always, begins at a specific point in space-time.

At the origins: Summer 1956 at Dartmouth

In the summer of 1956, the Dartmouth Summer Research Project on Artificial Intelligence brought together some of the greatest minds of the emerging computer science field: John McCarthy, Marvin Minsky, Claude Shannon, and others. Their goal was audacious: to formalize the mechanisms of intelligence so that they could be reproduced in a machine. The workshop is widely regarded as the starting point of artificial intelligence as a research field.

The participants weren’t content with theoretical ambitions: they already imagined machines capable of understanding language, solving complex problems, forming abstractions, and improving themselves. Even though the term AGI didn’t exist yet, the intuition was there: a truly intelligent machine should be able to generalize its skills, adapt to new contexts, and manipulate high-level concepts.

1997: Skynet takes control of nuclear weapons and annihilates 99% of humanity. (Terminator – James Cameron and Gale Anne Hurd)

Staggering progress and modern disillusionment

Now imagine we could travel back in time to present the latest developments to these researchers. We could explain to them that no human can beat machines at chess and Go, that we can have conversations with a machine and learn about almost any subject, that a machine can easily represent your cat in the style of Picasso or Van Gogh, and that this list could go on much longer.

They would likely say: “Great, you’ve succeeded!” and be amazed to see artificial intelligence become reality. How then could we explain to them that, by general consensus, AGI doesn’t exist yet?

We could run the same experiment with Turing and his famous test: how could we explain to him that machines now pass the Turing test, and yet general intelligence still isn’t here?

In an undefined future, the zeroth law justifies robots using manipulation, lies, and even assassination in the name of humanity’s good. (Robots and Empire – Isaac Asimov)

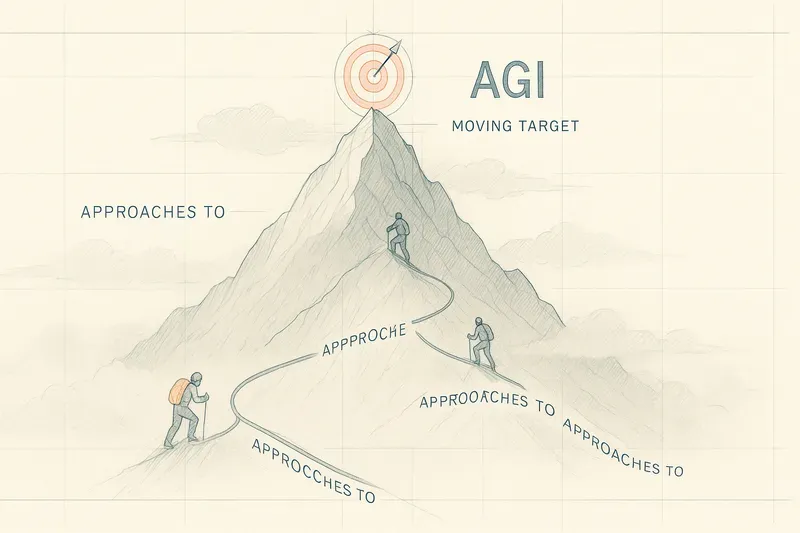

A moving target: AGI as an uncertain horizon

These thought experiments raise an essential question: is AGI a moving target that recedes as we approach it? A kind of Sisyphean task?

Several factors explain this. Today, there is no clear consensus on what intelligence is. The cognitive mechanisms we rely on, such as brain plasticity, remain poorly understood. The nature of consciousness is still an enigma, as is subjective experience (what we feel).

A concrete example mentioned at the 2022 AGI conference: an AGI should be capable of rediscovering the theory of general relativity from only the documents available in Einstein’s time.

The speakers also outlined the major steps to come:

- Understanding and solving complex problems: from a simple inventory of the laundry in two side-by-side baskets, an AGI would deduce the sorting rule: light laundry goes in the right basket, and dark laundry in the left one. The ARC benchmark (Abstraction and Reasoning Corpus), proposed by François Chollet in 2019, tests this type of human abstraction capability from very little data. For current LLMs (outside of brute-force or meta-learning conditions), the score remains close to 0%.

- Continuous learning: an AGI must be capable of learning in real time. If an error is pointed out to it once, it should never repeat it, even in a slightly different context. Today, LLMs can repeat the same errors as long as the correction isn’t explicitly injected into their context.

- Generalization: an AGI would understand implicit relationships: if A is B’s brother, then B is A’s brother or sister. The Reversal Curse study (2023) showed that models often fail to infer “England has London as its capital” from “London is the capital of England,” unless this exact formulation appears in the training data.

- Knowledge representation: an AGI would know how to distinguish between fact, assumption, and uncertainty. If information were missing, it would ask a question or admit that it doesn’t know. LLMs, on the other hand, can hallucinate a fictional scientist who “discovered quantum gravity in 2021.”

- Robustness in the face of absurd context: it would immediately identify absurd premises and refuse to answer them. Example: “If I wash my cat in a washing machine with an eco program, is it ecological?” → “This action is dangerous, please rephrase.” Recent models handle this better, but remain sensitive to phrasing and context.
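The generalization criterion above can be turned into a small check. What follows is only a minimal sketch, not the protocol of the Reversal Curse study: the facts, the question phrasings, and the `make_memorizing_model` stand-in are all hypothetical. The stand-in plays the role of a model that has only seen the forward formulation; in practice, `ask` would wrap a call to a real LLM.

```python
from typing import Callable, List, Tuple

# Each fact: (forward question, forward answer, reversed question, reversed answer).
# These examples and phrasings are illustrative assumptions.
Fact = Tuple[str, str, str, str]

FACTS: List[Fact] = [
    ("What is the capital of England?", "London",
     "London is the capital of which country?", "England"),
    ("What is the capital of France?", "Paris",
     "Paris is the capital of which country?", "France"),
]


def make_memorizing_model(facts: List[Fact]) -> Callable[[str], str]:
    """Toy stand-in for an LLM that only knows the exact forward phrasing."""
    memory = {forward_q: forward_a for forward_q, forward_a, _, _ in facts}
    return lambda question: memory.get(question, "I don't know")


def reversal_scores(ask: Callable[[str], str], facts: List[Fact]) -> Tuple[float, float]:
    """Return (forward accuracy, reversed accuracy) for a question-answering function."""
    forward_hits = sum(ask(fq).strip() == fa for fq, fa, _, _ in facts)
    reversed_hits = sum(ask(rq).strip() == ra for _, _, rq, ra in facts)
    return forward_hits / len(facts), reversed_hits / len(facts)


model = make_memorizing_model(FACTS)
forward_acc, reversed_acc = reversal_scores(model, FACTS)
print(f"forward: {forward_acc:.0%}, reversed: {reversed_acc:.0%}")
# prints "forward: 100%, reversed: 0%"
```

A system that truly generalizes would score the same in both directions; the gap between the two numbers is what the check measures.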

The gap between what we expect from an AGI and current reality remains immense, even though the progress of the last two years has been spectacular, almost disorienting.

2001: HAL 9000, the intelligent onboard computer, misaligned, eliminates almost the entire crew of Discovery One. (2001: A Space Odyssey – Arthur C. Clarke)

The visceral fear of replacement

We are emotional beings, nourished by narratives in which machines turn against us. The replacement of humans by AI makes for a highly viral hook, so we’re heavily exposed to it. This sometimes masks the positive contributions of progress in artificial intelligence.

I won’t claim here that this won’t happen. To put things in perspective, I remember my first job as a full-time developer, in 2002. I was seen as the one who would put everyone out of work: computer scientists would automate everything, and there would be no work left. But today these people are still at their posts. Things have simply evolved.

2101: after Operation Dark Storm, machines find a use for humans as energy sources. (The Matrix – Lana and Lilly Wachowski)

The future already in motion: coding distributed intelligence

Recent advances in LLMs mark a turning point. An army of coders, vibe-coders, no-coders, engineers, and data scientists, of which I am part, must now seize them through projects, products, and uses.

The building blocks are there:

- LLMs, which are widely accessible and can be specialized through prompting or fine-tuning.

- MCP (Model Context Protocol) servers, which expose tools and data sources to models through a standard interface.

- RAG (retrieval-augmented generation) and vector databases, which serve as memory and information sources for models.

- Orchestrators like LangChain, which connect everything and process data.
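To make the RAG building block more concrete, here is a minimal sketch of the retrieval step using only the Python standard library. It rests on several assumptions: the `DOCUMENTS` list is invented for illustration, the "embedding" is a plain word count rather than a learned model, and the final prompt would still have to be sent to an LLM of your choice. A real system would typically rely on a vector database and an embedding model instead.

```python
import math
import re
from collections import Counter
from typing import List

# Hypothetical knowledge base; a real system would store embeddings in a vector database.
DOCUMENTS = [
    "The Dartmouth workshop of 1956 launched artificial intelligence as a research field.",
    "The Reversal Curse describes models that learn A is B but fail to infer B is A.",
    "ARC tests abstraction from very few examples.",
]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the question."""
    query = embed(question)
    ranked = sorted(documents, key=lambda doc: cosine(query, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: List[str]) -> str:
    """Assemble the augmented prompt that would be sent to the model."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("What is the Reversal Curse?", DOCUMENTS))
```

The design point is that the model's knowledge is extended at query time, without retraining: only the retrieved context changes.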

Good news: these building blocks don’t replace the old ones, they add to them. They are new modules to design, test, and evolve.

Conclusion

A few selected quotes showing the lack of alignment among humans regarding the advent of AGI:

“Claiming to have reached a milestone in AGI is simply rigging arbitrary benchmarks.”

Satya Nadella, CEO of Microsoft

“If you define AGI as more intelligent than the most intelligent human, I think it will probably be next year, or in two years.”

Elon Musk, CEO of Tesla, SpaceX, xAI

“AGI is certainly not for next year, contrary to what our friend Elon says.”

Yann LeCun, former Chief AI Scientist at Meta

“Saying that AGI will arrive by 2029 is a conservative prediction.”

Ray Kurzweil, futurist

“Progress will be more arduous now that the easy victories have been won; we’ll now have to climb a steeper slope.”

Sundar Pichai, CEO of Google/Alphabet

I’m beginning to understand that AI allows me to achieve goals that were previously out of my reach. The future is already here. Even though the major players will continue to provide us with the essential building blocks, it’s up to us to find the uses and adapt them to real life. The future isn’t predicted. It’s built. And I already have my hands in the concrete.

In the third part, we’ll study the different approaches of the main players to achieve AGI, and we’ll establish a connection with the marvelous biological system that we are.

References

- Dartmouth Conference – Wikipedia

- Reversal Curse – arXiv (2023)

- Reversal Curse explained – Andrew Mayne (2023)

AiBrain
