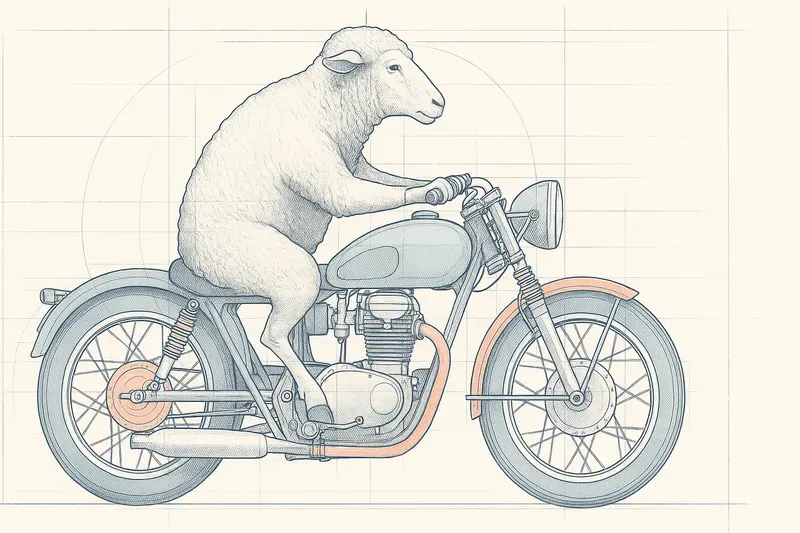

Most of the image generation models in use today are diffusion models. Let’s walk through what happens when one of them receives the request: “Draw me a sheep riding a motorcycle”.

The painter stands before their blank canvas, facing the void to be filled. With a broad gesture, they begin by laying down a uniform background, a neutral tone that will serve as the foundation for the world they’re about to create. Then come the first strokes: light lines, almost hesitant, that define the general composition — a body, a landscape, a scene in the making. Gradually, forms take shape. They add shadows, lights, volumes, shape depth, texture, atmosphere. Each brushstroke refines reality. And when they touch on the final details — a reflection in the eye, a lock of hair, a vein on a hand — the image, finally, comes to life.

How is the image generated?

Diffusion models follow much the same approach. We start with a completely random image made of noise (points scattered randomly across the image). A multi-step process then begins. At each step, the model, guided by the prompt, refines the image: masses first appear in the blur, then elements emerge from these masses (wheels and body for the motorcycle, legs and body for the sheep), and finally spaces are defined and details added. Step by step, the final image emerges from the blur.

However, there is an important difference between the painter and the AI: when they begin their canvas, the painter (in most cases) knows what they’re doing. Our model does not; it only performs mathematical operations.

Ok, now that we’ve seen what happens, let’s tackle the question of how the model manages to do it.

First, we need to understand that the request “Draw me a sheep riding a motorcycle” is processed by a first model, which has been trained on images paired with their descriptions. So it knows what a motorcycle and a sheep look like, but also what “riding a motorcycle” looks like.

The second model, the one in charge of creating the image, will therefore start from a random image and, at each rendering step, compare its image with the information given to it by the text model. It reinforces useful forms, attenuates inconsistencies, and refines details, until the image truly embodies what the sentence says.

How are the models trained?

For the text/image model, it’s rather simple. It has absorbed millions of described images:

- An image of a sheep → “a sheep”

- An image of a motorcycle → “a motorcycle”

- An image of a motorcyclist → “a man on a motorcycle”

So it has the ability to transform the prompt into a deep representation of what the image should contain, at the level of objects, interactions, but also style or emotion.

For the diffusion model, it’s a bit more complex to grasp. It is also trained on millions of images with their descriptions. During training, noise (the blur) is added to each image, little by little, until it becomes completely random. By studying this process step by step, the model learns to do the reverse: remove the blur to recover an image that matches the description.
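
For the curious, here is a minimal sketch of the "adding blur" half of training, using NumPy. Everything in it is an assumption made for illustration: the 8x8 array stands in for a real image, `add_noise` is a hypothetical helper, and the five-step `schedule` is invented. Real models use many more steps and a trained neural network.

```python
import numpy as np

def add_noise(image, noise, keep):
    """Blend a clean image with Gaussian noise.

    keep=1.0 returns the image untouched, keep=0.0 returns pure noise.
    """
    return np.sqrt(keep) * image + np.sqrt(1.0 - keep) * noise

rng = np.random.default_rng(0)
image = rng.uniform(-1.0, 1.0, size=(8, 8))  # stand-in for a training image
noise = rng.standard_normal(image.shape)     # the noise the model must learn to spot

# Hypothetical schedule: share of the original image kept at each step.
schedule = np.linspace(1.0, 0.0, 5)
noisy_versions = [add_noise(image, noise, keep) for keep in schedule]

# One training example: the model sees the noisy image
# and is asked to predict the noise that was added to it.
step = 2
model_input, target = noisy_versions[step], noise
```

The first element of `noisy_versions` is the clean image and the last one is pure noise; the model learns from everything in between.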

The Nerd Zone - Inside the mathematical mind of AI 🤓

If you liked the painter analogy, but you’re wondering what these “mathematical operations” and “information” transmitted between models really are, you’re in the right place. Welcome to the Nerd Zone. Here, we lift the hood to look at the engine: embeddings and latent space.

The language of AI: everything is just vectors

An AI model doesn’t understand words, concepts, or image pixels like we do. Its only language is mathematics. For an idea like “a sheep” to be processed, it must be translated into a form that the AI can manipulate: a vector.

Words and images are therefore translated by our models into vectors, also called embeddings. Vectors have an obvious mathematical advantage: they can serve as coordinates in complex spaces. We thus see a geometry of meaning emerge, where “sheep” and “ewe” are close to each other, but quite far from the concept of “motorcycle”. The whole strength of current models is that these embeddings capture deep representations of elements, which allows complex concepts to be manipulated mathematically.

A particularly telling example: "king" - "man" + "woman" ≈ "queen". If we remove the notion of man from king, we get the notion of royalty which, applied to woman, gives queen.
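
To make this geometry concrete, here is a toy sketch. The vectors below are written by hand for illustration, with five invented axes (royal, male, female, woolly, engine). Real embeddings are learned during training and have hundreds of dimensions with no readable labels. The helpers `cosine` and `nearest` are hypothetical names.

```python
import numpy as np

# Hand-made toy embeddings; axes: royal, male, female, woolly, engine.
embeddings = {
    "king":       np.array([1.0, 1.0, 0.0, 0.0, 0.0]),
    "queen":      np.array([1.0, 0.0, 1.0, 0.0, 0.0]),
    "man":        np.array([0.0, 1.0, 0.0, 0.0, 0.0]),
    "woman":      np.array([0.0, 0.0, 1.0, 0.0, 0.0]),
    "sheep":      np.array([0.0, 0.5, 0.5, 1.0, 0.0]),
    "ewe":        np.array([0.0, 0.0, 1.0, 1.0, 0.0]),
    "motorcycle": np.array([0.0, 0.0, 0.0, 0.0, 1.0]),
}

def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def nearest(vector):
    """Return the word whose embedding points in the most similar direction."""
    return max(embeddings, key=lambda word: cosine(embeddings[word], vector))

print(cosine(embeddings["sheep"], embeddings["ewe"]))         # close concepts
print(cosine(embeddings["sheep"], embeddings["motorcycle"]))  # distant concepts
print(nearest(embeddings["king"] - embeddings["man"] + embeddings["woman"]))
```

With these hand-picked vectors, the last line prints `queen`, and the sheep is much closer to the ewe than to the motorcycle.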

That said, let’s return to our sheep (and its motorcycle):

- Our first model will build a prompt embedding that will express this idea: “sheep in the process of executing the action of riding a motorcycle”.

- The diffusion model then starts from a random point in a vector space (the latent space). At each iteration, it makes a limited number of modifications to this latent representation so that the characteristics provided by the prompt vector appear in certain zones. For the rest (the road, the decor, the color of the motorcycle), the diffusion model relies on what it learned during training.

- Once the latent representation is finalized, the third model of the trio (a decoder) transforms these coordinates into an image made of pixels.
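
The three stages above can be sketched as a toy pipeline. This is only an illustration of the flow of data, not of how real models compute: `encode_prompt`, `denoise_step` and `decode` are hypothetical stand-ins with no trained network behind them. In particular, a real denoiser predicts the noise to remove, conditioned on the prompt embedding, rather than sliding the latent toward the embedding as done here.

```python
import numpy as np

def encode_prompt(prompt, dim=16):
    """Stand-in text encoder: turns a prompt into a fixed pseudo-embedding."""
    toy_hash = sum(prompt.encode("utf-8"))  # deterministic, unlike Python's hash()
    return np.random.default_rng(toy_hash).standard_normal(dim)

def denoise_step(latent, prompt_embedding, strength=0.2):
    """Stand-in denoiser: moves the latent a small step toward the prompt."""
    return latent + strength * (prompt_embedding - latent)

def decode(latent):
    """Stand-in decoder: squashes 16 latent values into a 4x4 image in (0, 1)."""
    return (1.0 / (1.0 + np.exp(-latent))).reshape(4, 4)

# 1. The first model builds the prompt embedding.
prompt_embedding = encode_prompt("a sheep riding a motorcycle")

# 2. The diffusion model starts from a random latent and refines it step by step.
latent = np.random.default_rng(42).standard_normal(16)
distances = []
for step in range(20):
    latent = denoise_step(latent, prompt_embedding)
    distances.append(float(np.linalg.norm(latent - prompt_embedding)))

# 3. The third model turns the final latent into pixels.
image = decode(latent)
print(distances[0], distances[-1])
```

The `distances` list shrinks at every iteration, which mirrors the idea that each step makes only a limited change and that the image emerges gradually.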

The little extras

- The “seed”: it is the number used to generate the random starting image. Because that randomness is entirely determined by the seed, the same seed with the same prompt (and the same settings) will give the same image. Hence the idea of keeping the seed and slightly modifying the prompt to obtain similar images.

- Guidance: when generating an image, we can set a guidance score. It controls how strongly the model integrates the prompt elements into the image: a higher score sticks closer to the prompt, a lower one leaves the model more freedom.
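
Both extras can be illustrated in a few lines. The sketch below assumes that guidance is implemented as classifier-free guidance, a common scheme in which the model makes one prediction with the prompt and one without, then exaggerates the difference. The text above does not say which scheme a given tool uses, and the function names and numbers here are made up for the example.

```python
import numpy as np

def starting_noise(seed, shape=(4, 4)):
    """The seed fully determines the pseudo-random starting latent."""
    return np.random.default_rng(seed).standard_normal(shape)

def guided_prediction(unconditional, conditional, guidance_scale):
    """Classifier-free guidance (assumed scheme): push the prediction
    further in the direction that the prompt suggests."""
    return unconditional + guidance_scale * (conditional - unconditional)

# Same seed, same starting point; a different seed gives a different one.
same_a = starting_noise(seed=1234)
same_b = starting_noise(seed=1234)
different = starting_noise(seed=1235)
print(np.array_equal(same_a, same_b), np.array_equal(same_a, different))

# Made-up predictions without and with the prompt.
unconditional = np.array([0.0, 0.0])
conditional = np.array([1.0, -1.0])
for scale in (0.0, 1.0, 7.5):
    print(scale, guided_prediction(unconditional, conditional, scale))
```

A scale of 0 ignores the prompt entirely, 1 uses the prompted prediction as is, and larger values amplify the influence of the prompt.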

AiBrain
