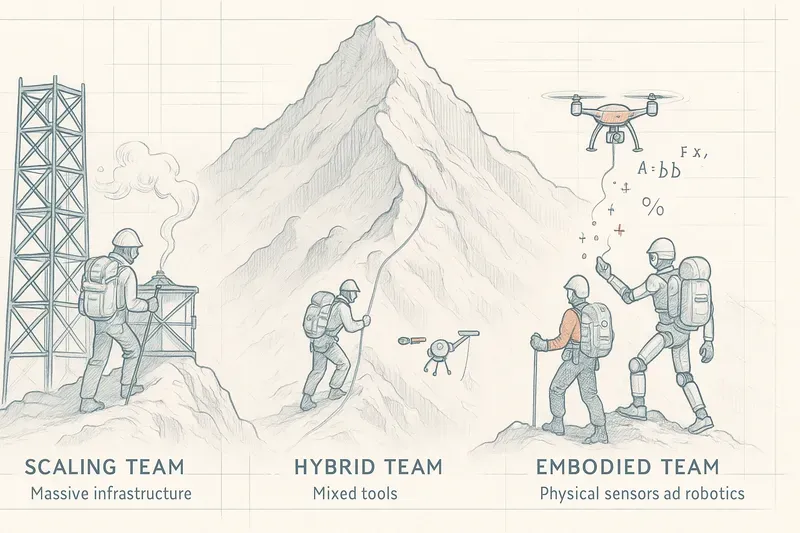

As we saw in part 2, we haven’t yet reached artificial general intelligence (AGI), and it isn’t even clearly defined. For today’s exercise, we’ll therefore picture it as a mountain whose summit is lost in the mist.

Here we are at the base camp of modern conversational AI. The first passes and peaks of the AI mountain have already been conquered, and three major teams are now gathered here, ready to continue the ascent.

The Teams

The Cloud Big Spenders (the law of the strongest)

They bet on scaling up current models, and their motto is “always more”: more servers, more data, more training time, more parameters… and a pair of sunglasses so as not to be dazzled by the training bill. Their faith rests on the idea that making models ever larger and more complex will cause new capabilities to emerge, eventually producing reasoning advanced enough to qualify as AGI.

But this path, as dizzying as it is seductive, is not without pitfalls. Continuous scaling leads to colossal training costs, soaring energy consumption, and dependence on highly concentrated infrastructure, which reserves this strategy for a handful of players able to burn hundreds of millions of dollars per iteration. And nothing guarantees that simply growing bigger will be enough to cross the threshold of abstract reasoning, self-awareness, or contextual adaptation.

The “Hybridists” (intuition and reasoning)

The symbolic approach gave rise to some of the first practical artificial intelligence tools: expert systems. These rely on an explicit set of rules and process information by applying those rules. For example, a symbolic system can analyze a structured medical file and deduce possible diagnoses by applying a set of clinical rules.
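To make the rule-based idea concrete, here is a minimal forward-chaining sketch in Python. It is a toy, not a real expert system: the record fields (`temperature_c`, `cough`), the two rules `R1` and `R2`, and their thresholds are hypothetical and purely illustrative, not clinical criteria. The engine keeps applying rules until no new conclusion can be derived, and records which rule produced each conclusion.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical toy rules for illustration only: these are NOT real clinical criteria.


@dataclass(frozen=True)
class Rule:
    name: str                                # identifier used to explain each conclusion
    condition: Callable[[dict, dict], bool]  # (record, derived facts) -> does the rule fire?
    conclusion: str                          # fact added when the rule fires


RULES = [
    Rule("R1", lambda rec, derived: rec.get("temperature_c", 0.0) >= 38.0, "fever"),
    Rule("R2",
         lambda rec, derived: "fever" in derived and bool(rec.get("cough", False)),
         "respiratory infection suspected"),
]


def infer(record: dict, rules: list[Rule]) -> dict[str, str]:
    """Forward chaining: sweep over the rules until no new conclusion appears.

    Returns each derived conclusion mapped to the name of the rule that produced it,
    so every result can be traced back to an explicit rule.
    """
    derived: dict[str, str] = {}
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conclusion not in derived and rule.condition(record, derived):
                derived[rule.conclusion] = rule.name
                changed = True
    return derived


if __name__ == "__main__":
    patient = {"temperature_c": 38.6, "cough": True}  # hypothetical structured record
    print(infer(patient, RULES))
    # → {'fever': 'R1', 'respiratory infection suspected': 'R2'}
```

Reversing the order of `RULES` yields the same conclusions, because the loop keeps sweeping until nothing new can be derived. The rule names attached to each result are what make this style of system easy to audit, and the hand-written rules are also what make it costly to extend.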

The hybridists know that conversational AIs lack that little extra something: a genuine reasoning capacity in the human sense of the term. Their goal is to combine the ability of language models to recognize patterns and extract facts from unstructured data with genuine layers of symbolic reasoning. Because each conclusion can be traced back to explicit rules, this approach aims to make decision-making more transparent.

However, the hybridist approach struggles to reconcile fuzzy statistical models with strict symbolic logic, which often makes the integration brittle. Moreover, symbolic coding requires intensive manual work: defining rules, keeping them consistent, and covering every useful case remains costly and hard to scale.

The Corporealists (action-reaction)

Here, no compromise: true intelligence cannot be purely digital. One must see, feel, and interact with the physical world to join the enlightened. The corporealists focus on embodied AI that learns from experience in the real world. In their view, purely digital AIs will inevitably hit the “reality gap”: human understanding of the real world goes far beyond abstract data patterns. It requires grounding in sensorimotor experience, continuous bodily perception, and a permanent capacity to adapt.

It’s essential to understand that being truly embodied takes more than being a robot. The system needs awareness of its own body, perception of its environment, and the ability to understand and act on what it perceives, adapting its actions autonomously and to context.

But this path, embodied as it may be, comes with very real physical constraints. Developing an AI that learns in the world means dealing with imperfect sensors, sometimes critical reaction times, and unpredictable environments, all factors that make learning heavy, slow, and costly. Unlike text models, which can ingest billions of words in a few days, an embodied AI must literally live each experience, one by one, which considerably slows its progress.

The Protagonists in the AGI Race: Each Their Own Path

At this stage of the ascent, it’s no longer just about research groups or theoretical ideas: the great AI powers are now companies that have each chosen, in their own way, a path up the artificial general intelligence mountain.

OpenAI

OpenAI embodies, on its own, the momentum of the “Cloud Big Spenders.” Its approach is clear: keep increasing scale until reaching a point where intelligence would emerge on its own. GPT-3, GPT-4, GPT-4o: so many milestones toward this invisible summit. But an inflection is emerging: with GPT-4o, voice, vision, and real-time dialogue have made their appearance. OpenAI is beginning to move beyond pure text and to explore a first form of software embodiment, not by crossing into robotics but by expanding the model’s senses.

DeepMind (Google)

DeepMind’s strategy is more layered. Scaling hasn’t been abandoned; quite the contrary, Gemini and the Chinchilla scaling-law work show its mastery of large architectures. But Google is also one of the few players to invest so seriously in embodied intelligence, notably with PaLM-E, a multimodal model that perceives its surroundings and plans actions for robots in the physical world. Add neuro-symbolic experiments such as AlphaGeometry, which pairs a language model with a symbolic deduction engine, and you get a vision that seeks to bring all three approaches together in an ambitious scientific unification.

Meta

Meta has positioned itself squarely in the corporealist camp. For Meta, AI isn’t limited to answering questions; it must inhabit the world: see in the first person (Ego4D), navigate complex environments (Habitat), touch and feel (with DIGIT). Meta considers that cognition begins in the senses, and that AGI cannot exist without sensorimotor grounding. This strong positioning is still barely visible in its language models such as LLaMA, but it deeply shapes its long-term vision.

Anthropic

Anthropic’s approach, more discreet and more philosophical, centers on making models safer, more understandable, and more governable. With Claude, it pursues the scaling logic but also introduces structured reasoning and internal rules: a form of diffuse symbolism, not hard-coded but instilled through training. Its “Constitutional AI” method trains the model to critique and revise its own answers against a written set of principles: a path that is still hybrid, but promising.

Mistral

On the European side, Mistral has established itself as a minimalist but sharp player: no robotics, no grand unifying architecture, little talk of AGI, but formidable efficiency in building powerful, lean open-source models. Its strategy clearly fits the scaling camp, with a touch of algorithmic elegance. Mistral plays the card of efficient sobriety, staying focused on hard-nosed engineering without spreading itself thin.

Tesla

More unexpectedly, Tesla is probably one of the most radically corporealist companies today. Its Autopilot system relies not on pre-established rules or fixed maps but on an onboard neural network fed by experience gathered over millions of kilometers. Here, the car becomes a learning body, an adaptive agent. With Optimus, its humanoid robot project, Tesla extends this logic to a human-like body. For Tesla, AGI is already embodied, even if it remains silent for now.

IBM

IBM, for its part, remains faithful to its symbolic roots. As an heir to the expert-system era, it continues to develop hybrid tools for healthcare, law, and industry. At IBM, intelligence is first a matter of logic, causality, and rules: less a giant multilingual model that guesses than architectures that reason, explain, and justify. In a world obsessed with emergent effects, IBM reminds us that clarity remains a form of power.

The Rebels of Alternative Architectures

(The Forgotten Paths of the AGI Mountain)

Away from the main routes, a few solitary climbers advance in silence. Their conviction? The AGI summit will be conquered neither by brute force nor by an avalanche of parameters. Their battle cry is simple: “The human brain runs on 20 watts, not a nuclear power plant!”

Figures such as Yann LeCun and Juergen Schmidhuber defend a radical approach: energy frugality, self-supervised learning, and brain-inspired architectures. No mountains of text to devour, no race to billions of weights, just the elegance of a system that learns like a child: by observing, acting, and simulating.

Further down the slope, the neuromorphic camp, with platforms such as SpiNNaker or Intel’s Loihi, dreams of circuits that compute like neurons: frugal, efficient, decentralized. Its goal: to free AI from the cloud and anchor it in silicon.

Why listen to them? Because they remind us of the obvious: adding parameters isn’t understanding. And what if, by aiming for the summit with billions, we were climbing… the wrong mountain?

Conclusion

Looking at all these paths, I realize how evolved and formidably efficient human intelligence is, if only through its capacity to imagine, to project itself, and to advance each day into still-uncharted territory.

I remain deeply convinced that AI, and even more so the quest for AGI, is leading us not toward a purely technical revolution, but toward a profoundly human one.

“Intelligence is not only knowing, it’s also knowing what to do with knowledge.”

Isaac Asimov — Robots and Empire

AiBrain
