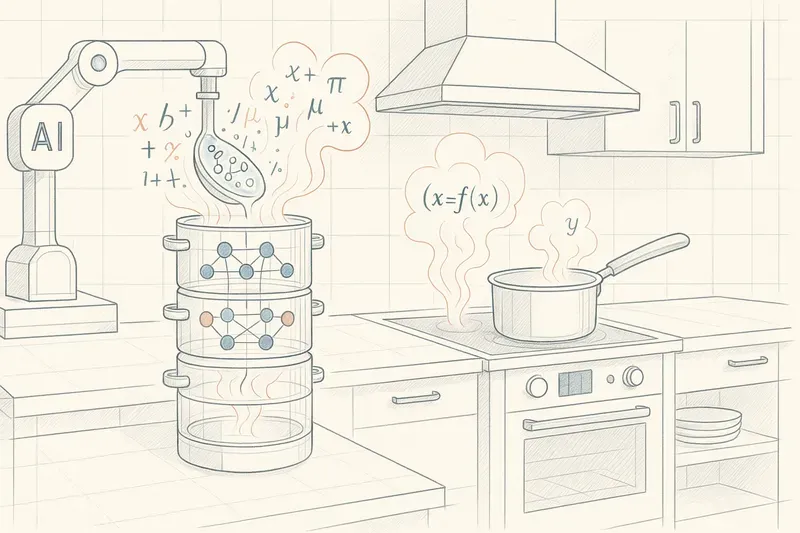

Have you ever wondered how a model like GPT works? Forget complex lines of code and imagine yourself in the kitchen. Here’s the recipe for a successful LLM, explained like a high-end algorithmic gastronomy dish.

You’ll need:

- Lots of varied texts (books, articles, conversations… even some Asimov), the equivalent of 3 million dictionaries.

- A large basket of words and fragments (from “cat” to “meow” through “purr” and “curious”).

- A multi-layer flavor distiller (transformer neural layers): each layer acts like a cutting-edge flavor extractor, meticulously analyzing word mixtures. They break down raw information, filter out what’s less relevant, and isolate crucial “meaning molecules,” thus revealing hidden connections and flavors between words.

- A pinch of attention (to marry flavors).

- A micro-batch oven (to cook progressively, like a patient pastry chef).

Step 1: Chop the text (Tokenization)

Split the text into pieces and assign each piece an identifier. Common words stay whole; long or rare words are cut into fragments (like “chocolate,” which can be separated into “choco” and “late”).
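For the curious, here is a minimal sketch of the chopping step. It assumes a tiny hand-written vocabulary (`VOCAB`) and a greedy longest-match rule; real tokenizers learn their vocabulary from data, so treat every name and entry below as illustrative only.

```python
# Toy vocabulary: every entry is an assumption made for illustration.
VOCAB = {"the": 0, "cat": 1, "choco": 2, "late": 3, "eats": 4, "<unk>": 5}

def tokenize_word(word, vocab):
    """Split one word into the longest known pieces, left to right."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                pieces.append(piece)
                start = end
                break
        else:
            # No known piece starts here: mark the rest of the word as unknown.
            pieces.append("<unk>")
            break
    return pieces

def encode(text, vocab):
    """Lowercase, split on whitespace, and map each piece to its identifier."""
    ids = []
    for word in text.lower().split():
        ids.extend(vocab[piece] for piece in tokenize_word(word, vocab))
    return ids

tokens = tokenize_word("chocolate", VOCAB)
ids = encode("The cat eats chocolate", VOCAB)
print(tokens)  # ['choco', 'late']
print(ids)     # [0, 1, 4, 2, 3]
```

The model never sees letters or words after this point, only the list of identifiers.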

Step 2: Marinate the pieces (Embeddings)

Dunk the “cat” portion in a random marinade of 1,536 flavors (a long list of mysterious numbers), which will capture all its characteristics.
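As a rough sketch of the marinade, the snippet below gives each token a list of 1,536 random numbers. The helper name `init_embeddings`, the four-word vocabulary and the small standard deviation of 0.02 are assumptions chosen for the example, not a description of how any particular model is initialized.

```python
import random

EMBED_DIM = 1536  # the number of "flavors" quoted in the text

def init_embeddings(vocab, dim, seed=0):
    """Give every token a random starting vector of `dim` numbers."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    # 0.02 is an assumed spread; the values only need to be small and random.
    return {token: [rng.gauss(0.0, 0.02) for _ in range(dim)] for token in vocab}

vocab = ["cat", "meow", "purr", "curious"]
embeddings = init_embeddings(vocab, EMBED_DIM)
cat_vector = embeddings["cat"]

print(len(cat_vector))   # 1536
print(cat_vector[:3])    # the first three "flavors" of "cat"
```

At this stage the numbers mean nothing. Training is what turns the random marinade into one that captures the word's characteristics.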

Step 3: Create flavor marriages (The attention mechanism)

It’s time to adjust each word’s marinade. In the sentence “The cat sleeps on the carpet while the rocket launches.”, each word is connected to every other. As cooking progresses, certain words come closer together, like “cat” and “sleeps”: a perfect harmony, like honey and lemon. “Cat” and “carpet” also pair well, like cheese and wine. “Cat” and “rocket”, on the other hand, don’t marry well, like water and oil, even though they appear together in this sentence. This process, repeated many times for each word, weaves deep semantic relationships: “cat” naturally associates with “purr” or “fur,” but keeps its distance from “X-Files”.
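The flavor marriages can be sketched in a few lines. This is a simplified version of scaled dot-product self-attention: it uses the word vectors directly as queries, keys and values, whereas real transformers first pass them through learned projection matrices. The three-number vectors are hand-picked for illustration, not taken from a trained model.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified self-attention: queries = keys = values = the input vectors."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score the query against every word, scaled by sqrt(dimension).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Blend all word vectors according to the attention weights.
        mixed = [sum(w * vec[i] for w, vec in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

words = ["cat", "sleeps", "carpet", "rocket"]
vectors = [  # hand-picked toy vectors (assumption)
    [1.0, 0.9, 0.0],
    [0.9, 1.0, 0.1],
    [0.7, 0.6, 0.2],
    [-0.8, 0.0, 1.0],
]
outputs, weights = self_attention(vectors)
for word, weight in zip(words, weights[0]):
    print(f"cat -> {word}: {weight:.2f}")
```

With these toy vectors, “cat” gives more weight to “sleeps” than to “carpet”, and the least to “rocket”.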

Step 4: Cook in small batches (Training)

Predict, taste, adjust: the secret of a successful dish in high-end algorithmic gastronomy. We’ll split our huge library into packets of 512 sentences and make the model guess the next word of each one. If, after “the cat”, the model predicts “barks” instead of “meows”, we adjust the multi-layer distiller settings and the word marinades so that “meows” becomes more likely after “cat”. Repeat thousands of times, until all words’ flavors are well balanced.
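Here is a deliberately tiny sketch of the predict, taste, adjust loop. Instead of a transformer, it trains a plain table of scores (one per pair of words) with gradient descent on a four-sentence corpus. The corpus, `LEARNING_RATE`, `BATCH_SIZE` and the number of passes are all assumptions picked to keep the example small.

```python
import math

corpus = ["the cat meows", "the dog barks", "the cat purrs", "the cat meows"]
words = sorted({w for sentence in corpus for w in sentence.split()})
index = {w: i for i, w in enumerate(words)}
V = len(words)

# Training pairs: (previous word, next word) as identifiers.
pairs = [(index[a], index[b]) for sentence in corpus
         for a, b in zip(sentence.split(), sentence.split()[1:])]

# One adjustable score per (previous, next) pair, all starting at zero.
logits = [[0.0] * V for _ in range(V)]

def softmax(row):
    highest = max(row)
    exps = [math.exp(x - highest) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def predict(prev_word):
    """Return the word the model currently finds most likely after prev_word."""
    probs = softmax(logits[index[prev_word]])
    return words[probs.index(max(probs))]

LEARNING_RATE = 0.5  # assumed value
BATCH_SIZE = 2       # assumed value; the text uses packets of 512

for epoch in range(200):
    for start in range(0, len(pairs), BATCH_SIZE):
        batch = pairs[start:start + BATCH_SIZE]
        grads = {}
        for prev, nxt in batch:
            probs = softmax(logits[prev])  # predict
            for j in range(V):
                # Taste: cross-entropy gradient is (predicted - actual).
                g = probs[j] - (1.0 if j == nxt else 0.0)
                grads[(prev, j)] = grads.get((prev, j), 0.0) + g / len(batch)
        for (prev, j), g in grads.items():
            logits[prev][j] -= LEARNING_RATE * g  # adjust

print(predict("cat"))  # meows
print(predict("dog"))  # barks
```

“meows” wins after “cat” simply because it follows “cat” twice in the corpus and “purrs” only once. A real LLM applies the same kind of correction to billions of settings at once.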

Step 5: Garnish with generation (Plating)

Ask your favorite LLM “Tell me a cat story.” The model will draw from “cat” flavors and find connections with “curious,” “mouse,” “night”; it adds a pinch of randomness and the story emerges: “A cat named Pixel loved exploring rooftops…” Serve warm.
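The “hint of random choices” is often implemented as temperature sampling, sketched below. The scores in `next_word_scores` are invented for the example; in a real model they would come out of the network at each step.

```python
import math
import random

def sample_next(scores, temperature=1.0, rng=random):
    """Pick one word from a {word: score} dict using temperature sampling."""
    words = list(scores)
    scaled = [scores[w] / temperature for w in words]
    highest = max(scaled)
    # Unnormalized softmax weights; random.choices normalizes them itself.
    weights = [math.exp(s - highest) for s in scaled]
    return rng.choices(words, weights=weights, k=1)[0]

# Hypothetical scores for the word that follows "A cat named Pixel was ..."
next_word_scores = {"curious": 2.0, "mouse": 1.5, "night": 1.0, "rocket": -2.0}

rng = random.Random(42)  # fixed seed so the example is repeatable
draws = [sample_next(next_word_scores, temperature=0.8, rng=rng) for _ in range(10)]
print(draws)

# A temperature close to zero almost removes the randomness.
cold = sample_next(next_word_scores, temperature=0.01, rng=rng)
print(cold)  # curious
```

A higher temperature gives the unlikely words more of a chance, which makes stories more surprising and also more error-prone.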

Conclusion: The Final Dish 🍲

GPT is a simmered word soup:

- Chopped into units,

- Marinated in digital flavors,

- Linked by flavor marriages,

- Cooked in batches.

As Auguste Gusteau often says: “Anyone can code… but only a good model knows how to tell a good cat story.”

The Expert Corner 🤓

You’re entering the “Nerd Zone” — entry is not forbidden to the general public, but exit is not guaranteed!

Transformers / Semantic distillers

Technical definition: Transformers are a neural network architecture, introduced by Vaswani et al. in 2017. Their great strength is being able to process an entire text in parallel thanks to the attention mechanism, unlike previous networks (RNN, LSTM) which advanced word by word.

- The model’s internal dimension ($d_{model}$): Inside these distillers, information is processed in a very high-dimensional “space.” This is the width of the neural layers. The larger this dimension, the more the model can manipulate and understand complex nuances and relationships between words.

- Impact: This architecture revolutionized language processing (and even image processing), allowing models to capture very complex semantic relationships on long texts.

- Analogy: Imagine a sophisticated battery of stills (distillers), capable of extracting the most subtle “meaning molecules.” The model’s internal dimension is like the complexity of these stills’ internal circuits.

Token / Piece

Technical definition: A token is the basic unit processed by an LLM. It’s not always a complete word: it can be a word, a piece of a word (“choco” + “late”) or a single character.

- To know: The rarer or longer a word is, the more likely it is to be cut into several tokens. A typical novel of about 100,000 words comes to roughly 130,000 to 200,000 tokens, depending on the language and the tokenizer.

- Order of magnitude: The original GPT-3 has a “context window” of 2,048 tokens, and GPT-3.5 raised it to 4,096. GPT-4 launched with 8,192 and 32,768-token versions, and later variants go further.
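To make the context window concrete, here is a minimal sketch of one simple way to fit a long text into it: keep only the most recent tokens. The function name `fit_to_context` is made up for the example, and dropping the oldest tokens is just one possible strategy.

```python
def fit_to_context(token_ids, context_window):
    """Keep only the most recent tokens that fit in the window."""
    if context_window <= 0:
        raise ValueError("context_window must be positive")
    return token_ids[-context_window:]

long_text = list(range(10))  # stand-in for 10 token identifiers
kept = fit_to_context(long_text, context_window=4)
print(kept)  # [6, 7, 8, 9]
```

Anything that falls outside the window is simply invisible to the model when it predicts the next token.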

Embedding (Digital marinade)

Technical definition: An embedding is a vector representation of a token. Each token is translated into a long list of numbers (a “vector”) that summarizes its semantic facets. The closer two words’ vectors are in this digital space, the more similar their meanings are.

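- Order of magnitude: OpenAI’s embedding models return vectors of 1,536 dimensions (text-embedding-ada-002, text-embedding-3-small) or 3,072 dimensions (text-embedding-3-large). Inside the largest GPT-3 model, the internal dimension is 12,288.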

- Analogy: It’s the marinade that captures all the flavors of an ingredient. Each number in the vector is a “note” (aroma, texture, etc.).
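“Closer” vectors are commonly compared with cosine similarity, sketched here. The three-number vectors in `toy` are invented for the example; real embeddings have hundreds or thousands of dimensions and are learned, not written by hand.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

toy = {  # hand-written toy vectors (assumption)
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "rocket": [0.1, 0.05, 0.95],
}

sim_close = cosine_similarity(toy["cat"], toy["kitten"])
sim_far = cosine_similarity(toy["cat"], toy["rocket"])
print(f"cat vs kitten: {sim_close:.2f}")
print(f"cat vs rocket: {sim_far:.2f}")
```

With these toy values, “cat” and “kitten” score close to 1, while “cat” and “rocket” score much lower.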

Batch / Micro-batch oven

Technical definition: A batch is a set of text sequences processed simultaneously during training to optimize hardware usage (GPU/TPU).

- Typical size: from 32 to 2,048 sequences.

- Analogy: A batch of small cakes baked together to optimize time and energy.
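A minimal sketch of filling the oven trays: the helper below groups token sequences into batches and pads the shorter ones so that every sequence in a batch has the same length. The name `make_batches` and the padding identifier `0` are assumptions for the example.

```python
def make_batches(sequences, batch_size, pad_id=0):
    """Group token-id sequences into padded batches of at most batch_size."""
    batches = []
    for start in range(0, len(sequences), batch_size):
        group = sequences[start:start + batch_size]
        longest = max(len(seq) for seq in group)
        # Pad on the right so all sequences in the batch share one length.
        batches.append([seq + [pad_id] * (longest - len(seq)) for seq in group])
    return batches

sequences = [[5, 3, 8], [7, 2], [4], [9, 9, 9, 1], [6, 6]]
batches = make_batches(sequences, batch_size=2)
for batch in batches:
    print(batch)
# [[5, 3, 8], [7, 2, 0]]
# [[4, 0, 0, 0], [9, 9, 9, 1]]
# [[6, 6]]
```

Equal lengths are what let the hardware process a whole batch in one go.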

Attention / Semantic flavor marriages

Technical definition: The attention mechanism allows the model to weigh the importance of each word relative to others in a sequence.

- Types of attention:

  - Self-attention: each word “looks” at other words in the same sequence to understand the global context.

  - Cross-attention: allows one sequence to align with another (e.g., text and image), useful for multimodal models or translation.

- Attention weights: A high score indicates a strong semantic link (honey and lemon), a low score indicates the opposite (water and oil).

- Analogy: A chef who tastes every possible combination to keep only perfect harmonies.
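For readers who want the formula behind the tasting, the scaled dot-product attention of Vaswani et al. (2017) can be written as follows. The symbols $Q$, $K$ and $V$ (the query, key and value matrices computed from the token vectors) and $d_k$ (the dimension of the keys) come from that paper, not from this article.

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
$$

Each row of the softmax output holds one word’s attention weights: they are positive and sum to 1, so a strong link with one word necessarily leaves less weight for the others.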

Final conclusion

This arbitrary cutting of words into tokens — “chocolate” potentially becoming “choco” + “late” — certainly allows the model to learn to “guess” brilliantly, but it also highlights its fundamental limitation: an LLM has no intimate consciousness of meaning. It manipulates text fragments, not ideas.

At a time when we fantasize about the emergence of “real” artificial intelligence, it’s essential to keep in mind this distinction: the technical feat is real, but the path toward human understanding of language remains, for now, another challenge.

It’s also essential to keep in mind that LLMs allow the human mind to function in new and different modes and environments, and that the emergence of novelty likely lies there.

AiBrain
