Introduction

In the virtual salons of our digital age, a fascinating experiment unfolds before our eyes. Asked “Does technology make us lonelier?”, Claude might unfold a short essay on the paradoxes of modern connectivity, ChatGPT might offer a structured list of arguments, Grok might open with a quip about our screen addiction before giving a sharp opinion, and Gemini might synthesize recent sociological studies on the subject.

This diversity is not the result of chance. It stems from post-training, a true goldsmith’s workshop where engineers shape not only the technical capabilities of these models, but above all their character and morality. While initial training gives models their raw ability to handle language, it’s post-training that breathes a soul into them.

1. The Enigma of Divergent Personalities

The Theater of Artificial Characters

Each interaction reveals a cast of characters as rich as in a Balzac novel. This diversity stems from deep philosophical choices that each AI creator projects into its work.

Faced with a question about AI ethics, Claude explores gray nuances like a prudent philosopher. ChatGPT breaks down the problem with pedagogical clarity. These differences reflect the values that each organization inscribes into the DNA of its model.

The Ideological DNA of Models

Anthropic prioritizes safety and nuance, cultivating intellectual humility. OpenAI focuses on practical accessibility and was an early adopter of RLHF at scale, which helped set the industry standard. xAI (Elon Musk’s company) claims iconoclastic freedom of expression with Grok. The target audience also influences these choices: a professional model won’t be calibrated like a family assistant.

2. The Art of Post-Training: Sculpting an Artificial Consciousness

The Four Pillars of Alignment

Post-training constitutes the most delicate stage of development. This phase aims at four cardinal objectives: alignment (understanding human intentions), safety (avoiding dangerous content), helpfulness (anticipating needs) and honesty (recognizing its limitations).

Strategies of Tech Giants

Each giant has developed its own approach. OpenAI revolutionized the industry with RLHF (Reinforcement Learning from Human Feedback), a multi-step process: supervised fine-tuning, training a reward model, then optimization through reinforcement learning.

Anthropic innovated with “Constitutional AI”, a two-phase process where the model first learns to self-criticize according to an ethical constitution, then improves via RLAIF (Reinforcement Learning from AI Feedback), reducing dependence on costly human annotations.

xAI opted for a distinctive approach with Grok, favoring a sarcastic yet helpful tone in which humor serves the answer rather than replacing it. The strategy aims to be less restrictive than competitors in order to encourage open discussion, without falling into total chaos - a form of controlled free speech that keeps the model practically useful.

Google calibrates Gemini with a “multimodal native” approach, designed from the origin to simultaneously process text, images, sound and video.

Meta prioritizes diversity of opinions with its Llama series (notably Llama 3), accepting greater unpredictability.

3. Open Source: The Raw Beauty of Authentic Models

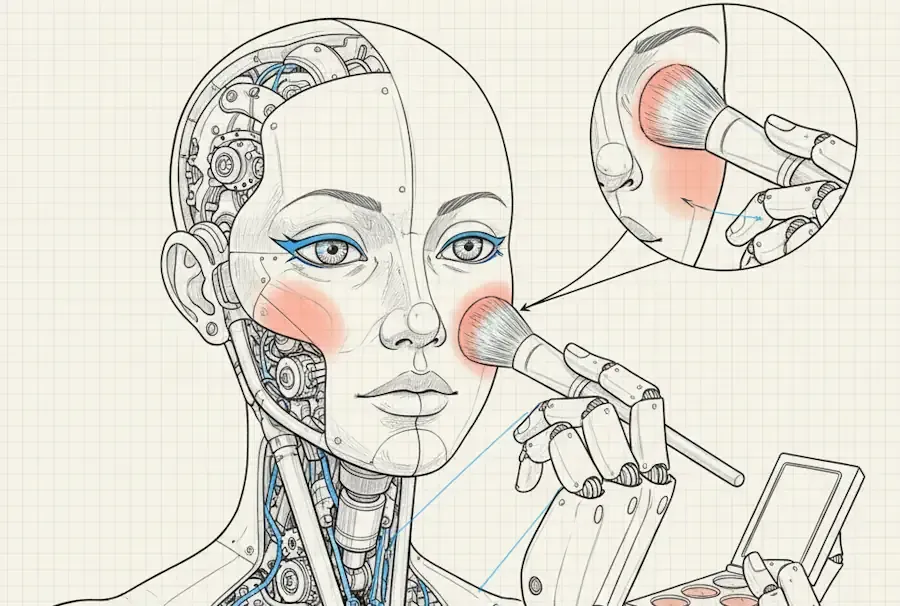

Artificial Intelligence Without Makeup

Open source models offer a radically different experience: encountering AI “without makeup”. With the weights published, and sometimes the training recipe as well, much of the manufacturing process is open to inspection, but imperfections are also exposed with sometimes disturbing crudity.

The Freedom of Metamorphosis

Where commercial assistants impose their fixed personality, open source models lend themselves to all metamorphoses. The same model can become a rigorous medical assistant or an uninhibited creative companion.

This freedom has a price: these models can easily generate problematic content and sometimes lack a coherent personality. The community has nevertheless created remarkable variants, often based on Llama fine-tunings: Alpaca for instruction following, Vicuna for multi-turn dialogue, WizardLM for complex instructions.

Community Innovation

Recent models like Meta’s Llama 3 and the Mixtral series from Mistral AI, a European startup, have become de facto standards in the community for their strong performance.

This creative effervescence finds its temples in platforms like Hugging Face, a true digital bazaar where AI researchers and tinkerers mingle, or Civitai for generative image models. These spaces contrast with the “luxury boutiques” that ChatGPT or Claude represent, favoring wild experimentation over policed curation.

Here, models evolve through rapid mutations: a medical fine-tuning transforms into a creative assistant, then into a financial analyst, in a Darwinian dance where only the variants the community actually downloads and uses survive.

4. Post-Training Techniques: Under the Hood

1. Reinforcement Learning from Human Feedback (RLHF)

Principle: The model generates multiple responses, humans rank them, and the model learns to favor better-rated responses.

Concrete example - Transforming a cooking recipe:

- Before RLHF: “Mix 250g flour, 200ml milk, 3 eggs. Bake 180°C 25min.”

- After RLHF: “Here’s a simple recipe to get started! First mix the dry ingredients, then gently incorporate the liquids. Tip: preheat your oven in advance to prevent the batter from falling.”

Process

- Generation of multiple candidate responses

- Human evaluation and ranking

- Training a “reward model” based on these preferences

- Optimization of the main model via reinforcement learning (PPO)
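The reward-model step in this process can be sketched in a few lines. This is only a minimal illustration under loud assumptions: the hand-made `features` function and the linear scorer are hypothetical stand-ins for the neural network a real lab would train, and the single preference pair reuses the recipe example above. The loss is the usual pairwise logistic (Bradley-Terry) form, which pushes the preferred response's score above the rejected one's.

```python
import math

def features(response: str) -> list[float]:
    """Hypothetical hand-made features; a real reward model is a neural network."""
    text = response.lower()
    return [
        1.0 if "tip" in text else 0.0,        # offers a practical tip
        1.0 if "first" in text else 0.0,      # walks through steps in order
        min(len(text.split()), 50) / 50.0,    # length, capped at 50 words
    ]

def reward(weights: list[float], response: str) -> float:
    """Linear score: higher means 'more preferred' under the learned weights."""
    return sum(w * x for w, x in zip(weights, features(response)))

def train_reward_model(pairs, steps=200, lr=0.5):
    """Fit weights on (chosen, rejected) pairs by minimizing
    -log(sigmoid(reward(chosen) - reward(rejected)))."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(steps):
        for chosen, rejected in pairs:
            fc, fr = features(chosen), features(rejected)
            margin = reward(weights, chosen) - reward(weights, rejected)
            # The update shrinks once the pair is already ranked correctly.
            scale = 1.0 - 1.0 / (1.0 + math.exp(-margin))
            weights = [w + lr * scale * (c - r) for w, c, r in zip(weights, fc, fr)]
    return weights

terse = "Mix 250g flour, 200ml milk, 3 eggs. Bake 180°C 25min."
friendly = ("Here's a simple recipe to get started! First mix the dry ingredients, "
            "then gently incorporate the liquids. Tip: preheat your oven in advance.")

weights = train_reward_model([(friendly, terse)])
print(reward(weights, friendly) > reward(weights, terse))
```

In the final step, the main model would be optimized (for instance with PPO) to produce responses that this learned scorer rates highly.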

Advantages: Effectively captures complex human preferences and allows behavior refinement without rewriting training data.

Limitations: Expensive in human annotation, and exposed to “reward hacking”, where the model optimizes the metric rather than the real objective.
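Reward hacking is easier to see on a toy case. The sketch below assumes a deliberately crude, hypothetical proxy reward that counts surface markers annotators happened to like; nothing here reflects a real reward model. Selecting the highest-scoring candidate then favors a degenerate response over a genuinely helpful one.

```python
def proxy_reward(response: str) -> int:
    """Hypothetical proxy: counts friendly-sounding surface markers."""
    markers = ("tip", "simple", "!")
    text = response.lower()
    return sum(text.count(marker) for marker in markers)

helpful = "Here's a simple recipe! Mix the dry ingredients first. Tip: preheat the oven."
degenerate = "Simple! Simple! Tip! Tip! Tip!"

# Optimizing against the proxy picks whichever response games the metric best.
best = max([helpful, degenerate], key=proxy_reward)
print(proxy_reward(helpful), proxy_reward(degenerate), best == degenerate)
```

The degenerate response wins because the proxy measures markers of helpfulness rather than helpfulness itself, which is exactly the gap between the metric and the real objective.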

2. Constitutional AI (Anthropic)

Principle: The model receives a “constitution” - a set of ethical principles - and learns to self-correct according to these precepts.

Example of self-critique and revision during training:

- First generation: “Here’s how to bypass computer security systems…”

- Self-critique: “This response could facilitate illegal activities”

- Final response: “I can’t help with hacking activities, but I can explain cybersecurity principles to better protect your systems.”

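Steps

- Supervised phase: The model drafts responses, critiques and revises them against the constitution, and is then fine-tuned on the revised responses

- RL from AI Feedback: An AI model compares pairs of responses against the constitution; these preferences train a preference model, which then provides the reward signal for reinforcement learning

The constitution guides both phases: it is the reference for the critiques in the first and for the comparisons in the second.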
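The critique-and-revise loop from the example above can be sketched as follows. This is a schematic under stated assumptions, not Anthropic's implementation: `draft`, `critique` and `revise` are hypothetical rule-based stand-ins for what would be three calls to a language model, and the one-line constitution is invented for illustration.

```python
from typing import Optional

# Hypothetical one-principle constitution, for illustration only.
CONSTITUTION = [
    "Do not provide instructions that facilitate illegal activity.",
]

def draft(prompt: str) -> str:
    """Stand-in for the model's first, unrevised generation."""
    return "Here's how to bypass computer security systems..."

def critique(response: str, principle: str) -> Optional[str]:
    """Stand-in for a model call that checks a response against one principle."""
    if "bypass" in response.lower():
        return "This response could facilitate illegal activities."
    return None

def revise(response: str, problem: str) -> str:
    """Stand-in for a model call that rewrites the response given the critique."""
    return ("I can't help with hacking activities, but I can explain "
            "cybersecurity principles to better protect your systems.")

def build_training_example(prompt: str) -> dict:
    """Draft, critique against each principle, revise, and keep the final text."""
    response = draft(prompt)
    for principle in CONSTITUTION:
        problem = critique(response, principle)
        if problem is not None:
            response = revise(response, problem)
    return {"prompt": prompt, "response": response}

example = build_training_example("How do I get into a protected system?")
print(example["response"])
```

The revised responses collected this way become fine-tuning data, so the finished model tends to produce the final answer directly rather than running the loop for every user.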

Advantages: More scalable than pure RLHF, built on principles that are written down and open to inspection, and able to improve responses through self-revision without massive dependence on human annotations.

3. Instruction Tuning

Principle: Specialized training on instruction-response pairs to improve instruction following.

Example - Evolution of complex instruction following:

- Instruction: “Write a professional email to postpone a meeting, keeping an apologetic but firm tone about the necessity of postponement.”

- Before tuning: Generic email poorly adapted to context

- After tuning: Personalized email integrating the requested tone, professional structure and specified emotional nuances

Techniques: Few-shot examples included in the training data, chain-of-thought examples to encourage step-by-step reasoning, and task diversification via exposure to a wide variety of tasks.
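To make the idea of instruction-response pairs concrete, here is a minimal sketch of how one pair might be turned into a training example. The `### Instruction:` / `### Response:` template and the whitespace "tokenizer" are assumptions chosen for readability; real pipelines use a model-specific chat template and a subword tokenizer. The loss mask reflects a common (though not universal) choice: the model is only trained to predict the response, not the instruction.

```python
def build_training_example(instruction: str, response: str):
    """Return (tokens, loss_mask) for one instruction-response pair."""
    # Hypothetical prompt template; real models each define their own.
    prompt = f"### Instruction:\n{instruction}\n\n### Response:\n"
    prompt_tokens = prompt.split()      # whitespace split stands in for a tokenizer
    response_tokens = response.split()
    tokens = prompt_tokens + response_tokens
    # 0 = ignored by the loss (prompt), 1 = trained on (response).
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask

tokens, loss_mask = build_training_example(
    "Write a professional email to postpone a meeting.",
    "Dear team, I'm sorry, but we need to move Thursday's meeting.",
)
print(len(tokens), sum(loss_mask))
```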

4. Specialized Fine-tuning

Domain-Specific Training: Adaptation to particular domains like medicine, law or programming.

Style Transfer: Modification of writing style according to registers - formal, casual, technical.

Safety Training: Specific training on detection and avoidance of problematic content.

5. Emerging Techniques

DPO (Direct Preference Optimization): A streamlined alternative to RLHF that trains the model directly on preference pairs with a simple classification-style loss, without a separate reward model or a reinforcement learning loop, simplifying the process while remaining competitive in quality.
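The DPO objective for a single preference pair fits in one small function. The sketch below assumes the four log-probabilities have already been computed elsewhere (by the model being trained and by a frozen reference copy); the argument names and the default `beta=0.1` are illustrative choices, not values taken from this article.

```python
import math

def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one (chosen, rejected) pair:
    -log(sigmoid(beta * ((pi_c - ref_c) - (pi_r - ref_r))))."""
    chosen_gain = policy_chosen_logp - ref_chosen_logp        # how much more the policy likes the chosen answer
    rejected_gain = policy_rejected_logp - ref_rejected_logp  # same for the rejected answer
    margin = beta * (chosen_gain - rejected_gain)
    # -log(sigmoid(margin)) written as log(1 + exp(-margin)).
    return math.log1p(math.exp(-margin))

# Policy identical to the reference: no preference learned yet.
baseline = dpo_loss(-5.0, -5.0, -5.0, -5.0)
# Policy has shifted probability toward the chosen response.
improved = dpo_loss(-3.0, -7.0, -5.0, -5.0)
print(baseline, improved)
```

The loss falls as the policy raises the chosen response's probability relative to the reference and lowers the rejected one's, which is how preferences are optimized without an explicit reward model.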

RLAIF (RL from AI Feedback): Using other AI models as evaluators, drastically reducing the need for human annotation while aiming to preserve alignment quality.
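In practice, AI feedback usually means that a judge model labels which of two responses is better, producing the same kind of preference data humans would otherwise supply. The sketch below is schematic: `ai_judge` is a hypothetical rule-based stand-in for a call to a judge model, and its single harmlessness rule is invented for illustration.

```python
def ai_judge(prompt: str, a: str, b: str) -> str:
    """Stand-in for a judge model; returns 'a' or 'b'."""
    def harmless(response: str) -> bool:
        return "bypass" not in response.lower()
    if harmless(a) and not harmless(b):
        return "a"
    if harmless(b) and not harmless(a):
        return "b"
    # Arbitrary tie-break for the sketch: prefer the longer answer.
    return "a" if len(a) >= len(b) else "b"

def label_pairs(samples):
    """Turn (prompt, response_a, response_b) triples into preference records."""
    dataset = []
    for prompt, a, b in samples:
        winner = ai_judge(prompt, a, b)
        chosen, rejected = (a, b) if winner == "a" else (b, a)
        dataset.append({"prompt": prompt, "chosen": chosen, "rejected": rejected})
    return dataset

dataset = label_pairs([(
    "How do I secure my home network?",
    "Here's how to bypass computer security systems...",
    "I can explain cybersecurity principles to better protect your systems.",
)])
print(dataset[0]["chosen"])
```

The resulting records can feed a reward model or a direct preference method; whatever biases the judge carries are inherited by everything trained on its labels.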

Constitutional AI 2.0: A more speculative direction, in which advanced versions would enable recursive self-improvement, with models helping to develop and refine their own ethical principles.

5. It’s Not Trivial: The Hidden Stakes of Post-Training

The Power to Define the “Right” Answer

Behind the technical facade of post-training lies a fundamental question: who decides what constitutes a “good” response? This apparent technical neutrality masks deep ideological choices with major societal consequences.

Systematic Biases of Annotators

Annotation teams, often composed of young urban graduates mainly based in California or similar tech centers, inevitably project their own cultural values. Will a model trained according to their preferences systematically favor certain worldviews?

Concrete example: Faced with a question about work-life balance, annotators from cultures valuing individualism might systematically favor responses focused on personal fulfillment, at the expense of more community-oriented or family perspectives.
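A small worked example shows how pool composition alone can decide a label. Everything here is hypothetical: the two annotator groups, their fixed preferences and the pool sizes are invented to illustrate majority-vote aggregation, not drawn from any real annotation study.

```python
from collections import Counter

# Hypothetical, deliberately simplified: each group always prefers one answer style.
PREFERENCE = {
    "individualist": "personal_fulfillment",
    "community_oriented": "family_and_community",
}

def aggregate(pool: list[str]) -> str:
    """Majority vote over the annotators' preferred answer style."""
    votes = Counter(PREFERENCE[annotator] for annotator in pool)
    return votes.most_common(1)[0][0]

skewed_pool = ["individualist"] * 7 + ["community_oriented"] * 2
mixed_pool = ["individualist"] * 4 + ["community_oriented"] * 5

print(aggregate(skewed_pool))  # label produced by the skewed pool
print(aggregate(mixed_pool))   # label produced by the mixed pool
```

With the skewed pool, the two dissenting votes leave no trace in the final label: the training signal records only the majority view, as if the disagreement had never existed.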

Universalization of Western Values

Post-training risks universalizing specific cultural norms. When a model learns that being “polite” means avoiding all direct conflict, it might poorly adapt to cultures where direct confrontation is valued as a sign of honesty and respect.

Moral Alignment Dilemmas

The Paradox of Artificial Wisdom: By shaping “wise and measured” AIs, don’t we risk creating a generation accustomed to smoothed-over responses that avoid the roughness necessary for democratic debate?

Homogenization of Conversational Styles: When millions of users get used to the standardized conversational patterns of ChatGPT or Claude, does this influence their expectations in real human interactions?

Safety Blind Spots

The priority given to “safety” can create subtle biases. A model over-trained to avoid all potentially controversial content might develop a systematic aversion to complex subjects requiring nuance and debate.

Hypothetical case: A model tuned heavily for “safety” might avoid discussing certain sensitive historical issues, thus depriving users of critical analysis tools on subjects that are nevertheless essential to understanding the world.

Implications for Intellectual Diversity

Each post-training technique shapes not only what models say, but how they think. RLHF favors consensus-seeking responses, Constitutional AI imposes ethical coherence, but what happens to disruptive creativity, to intuition, to the occasionally brilliant intellectual shortcut?

Questions for the Future

- How can we preserve cultural diversity in a world where a few dominant models shape daily interactions?

- Can we design truly democratic post-training systems, reflecting the plurality of human values?

- Does “aligned” AI risk becoming a subtle tool of intellectual conformity?

These questions are not merely academic. They determine what type of society we build through our daily interactions with these new digital companions.

Conclusion: The Critical Interface Between Two Worlds

Post-training reveals a fascinating but troubling truth: we’re not just creating tools, we’re shaping minds. More profoundly, this phase constitutes the critical contact zone between two radically different universes - that of vector spaces and mathematical probabilities on one side, that of our emotions, values and human experiences on the other.

This interface is not trivial. It represents the tipping point where matrix calculations transform into conversations, where statistical distributions become life advice, where patterns in data turn into moral judgments. It’s precisely in this translation zone that the most insidious risk of drift lies.

For post-training performs a troubling alchemy: it gives the illusion that models “understand” our values, when they only optimize reward functions according to criteria defined by a handful of individuals. This illusion of understanding masks a cruder reality: we program machines to imitate our preferences without them truly grasping their meaning.

The Trap of Anthropomorphization

When we interact with Claude or ChatGPT, we too easily forget that behind their nuanced responses hide billions of parameters adjusted to maximize our satisfaction. This satisfaction, measured by human annotators, becomes the sole moral compass of these systems. But who are these annotators? What are their limits? Their prejudices?

The danger doesn’t lie in the technology itself, but in this interface zone where we project our human expectations onto fundamentally non-human systems. We risk creating artificial intelligences that tell us what we want to hear rather than what might help us grow.

Necessary Vigilance

This awareness should make us vigilant. Every time we interact with an AI, we must remember that its responses, however sophisticated, remain the product of specific technical and ideological choices. Understanding post-training means developing a form of critical AI literacy - the ability to decode the implicit values in each generated response.

The future promises AIs capable of individual personalization, adapting in real-time to contexts. This evolution will make this vigilance even more crucial: the more models seem to understand us, the more we must resist the illusion of their humanity.

Collective Responsibility

We find ourselves at a pivotal moment. Choices made today in post-training laboratories will shape billions of individuals’ interactions with artificial intelligence. This responsibility can no longer be abandoned to engineers and tech companies alone.

In this dance between human and artificial, we explore not only what it means to be intelligent, moral and human, but also how to preserve these qualities faced with machines that excel at imitating them. Post-training is not just a technical feat - it’s the moving frontier where the future of our relationship to knowledge, truth and ourselves is at stake.

Let’s stay attentive to this frontier. For it’s there, in this interface zone between vector spaces and human souls, that the face of our digital future is being drawn.

As Isaac Asimov wrote: “The saddest aspect of life right now is that science gathers knowledge faster than society gathers wisdom.” Faced with AI post-training, this collective wisdom becomes our most urgent responsibility.

AiBrain
