Why “review this code” and “review this code for security” don’t yield the same results

Executive Summary

Observation: An obvious security flaw in a piece of code (e.g., SQL injection) can be caught or missed depending on how the prompt is phrased. “Review this code” may miss it, while “Review this code for security” reliably catches it.

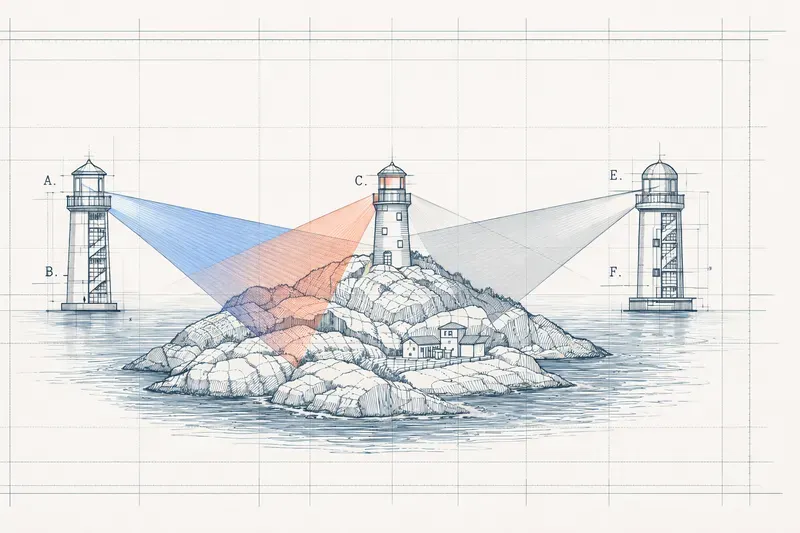

Central hypothesis: The prompt doesn’t just change what we ask for; it guides where the model looks. In the transformer architecture, the multi-head attention mechanism lets the model observe the same input from multiple angles. The prompt acts less like an after-the-fact filter and more like a way of orienting the model’s gaze: it guides exploration.

Recent research: Work on reasoning models (DeepSeek-R1, QwQ-32B) shows that performance comes from an internal diversification of perspectives, a “society of thought” that emerges naturally when models are optimized for accuracy.

Implication for agent design: The real question isn’t “how many agents?” but “how many distinct perspectives?” A well-designed multi-agent system covers the space of perspectives; it is not cosmetic parallelism.

Conclusion: An LLM is not an oracle, it’s a biased explorer. The prompt is an exploration bias. Truth is not a point, it’s a silhouette that only appears when enough projections overlap.

Glossary

- Multi-head attention: the core mechanism of Transformers, which lets the model observe the same input from several angles at once. Each attention “head” captures different relationships: syntactic, semantic, structural, contextual.

- Transformer: the neural network architecture at the heart of LLMs, combining multi-head attention and feed-forward layers to process token sequences.

- Prompt engineering: the art and science of formulating instructions to guide an LLM’s behavior. A good prompt guides exploration rather than simply describing the task.

- Attention routing (attention orientation): the idea that the prompt guides where the model looks in its knowledge space, rather than filtering its output afterward.

- Society of Thought: a phenomenon observed in reasoning models, where performance emerges from an internal diversification of perspectives; the model simulates internal dialogues between different angles of analysis.

- KV cache: a store of the attention keys and values already computed for earlier tokens, so they are reused instead of recomputed. It improves efficiency but can also anchor the model in its initial trajectory.

- Homogeneous multi-agent: a system that uses the same model with different prompts; its value comes from sampling different trajectories, not from parallelism.

- Heterogeneous multi-agent: a system that uses different models, benefiting from their different biases, blind spots, and cognitive styles.

- OneFlow: an approach showing that a single agent working over multiple turns can match multi-agent systems on some benchmarks. Its structural blind spot: the same mechanism that optimizes efficiency anchors the model in its initial trajectory.

The Disturbing Observation

Take a piece of code with an obvious security flaw — an SQL injection, for example. Ask Claude or GPT:

“Review this code”

You get comments on readability, structure, sometimes naming conventions. The flaw? Sometimes mentioned, sometimes not.

Same code, same model:

“Review this code for security vulnerabilities”

The flaw appears first. With details. And other issues the first review didn’t catch.

Yet, in both cases, we’re “reviewing” the same code.
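For concreteness, the kind of flaw in question looks like this minimal Python sketch using the standard-library `sqlite3` module. The `users` table and both function names are hypothetical. The first function builds its query by string interpolation (the injection a security review should flag); the second uses a parameterized query.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # VULNERABLE: user input is spliced into the SQL text, so a value like
    # "' OR '1'='1" rewrites the WHERE clause (SQL injection).
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # FIXED: the "?" placeholder makes sqlite3 bind username as data, never as SQL.
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

    payload = "' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # every row leaks
    print(find_user_safe(conn, payload))    # [] since no user has that literal name
```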

Security should be a primary concern in a good review.

Why doesn’t the model see it systematically?

The Prompt Isn’t an Instruction, It’s a Spotlight

My hypothesis: when you change the prompt, you’re not just changing what you’re asking for. You’re changing where the model looks.

In the transformer architecture, the multi-head attention mechanism allows the model to observe the same input from multiple angles. Each head captures different relationships: syntactic, semantic, structural, contextual.
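To make “multiple angles” concrete, here is a toy, pure-Python sketch of scaled dot-product attention with two heads. It is a deliberate simplification: each hypothetical head reads a different slice of hand-picked 4-dimensional embeddings instead of using learned projection matrices, and the tokens, numbers, and head names are invented for illustration. The only point is that the same query token can put its strongest weight on different tokens depending on the head.

```python
import math


def softmax(scores):
    # Numerically stable softmax
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    # Scaled dot-product attention weights: softmax(q . k / sqrt(d)) over all keys
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)


def multi_head_weights(embeddings, query_index, heads):
    # Toy multi-head attention: each head looks at its own slice of the embedding.
    # (Real Transformers use learned W_Q/W_K projections per head instead of slicing.)
    result = {}
    for name, (lo, hi) in heads.items():
        query = embeddings[query_index][lo:hi]
        keys = [e[lo:hi] for e in embeddings]
        result[name] = attention_weights(query, keys)
    return result


# Hypothetical tokens and hand-picked embeddings
tokens = ["execute", "(", "username"]
embeddings = [
    [1.0, 0.0, 0.0, 2.0],  # "execute"
    [2.0, 0.0, 0.0, 0.0],  # "("
    [0.0, 1.0, 0.0, 3.0],  # "username"
]
heads = {"syntax": (0, 2), "data_flow": (2, 4)}

for name, weights in multi_head_weights(embeddings, 0, heads).items():
    focus = tokens[weights.index(max(weights))]
    print(f"{name}: strongest attention from 'execute' goes to {focus!r}")
# syntax: strongest attention from 'execute' goes to '('
# data_flow: strongest attention from 'execute' goes to 'username'
```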

The prompt acts as a way of orienting the model’s gaze:

- “Review this code” orients toward general quality patterns (readability, structure, conventions).

- “Review this code for security” orients toward threat patterns (input validation, trust boundaries, authentication flows, attack surfaces).

⚠️ This is an analogy, not a claimed mechanistic link between the prompt and specific attention heads. What the two share is a principle: multiplying viewpoints as a strategy for understanding.

The model doesn’t “see” everything then filter afterward.

It explores from a given angle.

What Recent Research Confirms

Society of Thought: Diversity Emerges Naturally

Work on reasoning models (DeepSeek-R1, QwQ-32B, etc.) shows that performance doesn’t come from a single linear chain of reasoning but from an internal diversification of perspectives.

Models simulate internal dialogues between different analysis angles — a “society of thought.”

This behavior isn’t programmed:

it emerges when the model is optimized for accuracy.

The system learns that diversifying angles improves result quality.

OneFlow: The Limits of the Single Agent

OneFlow shows that a single agent working over multiple turns can match multi-agent systems on several benchmarks.

Main argument:

- the KV cache lets the model reuse computations,

- efficiency is higher,

- coordination is simpler.
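The efficiency argument can be illustrated with a toy, single-head decoding loop in pure Python that counts key/value projections with and without a cache. With the cache, each token’s key and value are computed once and reused; without it, they are recomputed at every step. The projection matrices, the use of the raw token vector as the query (W_Q omitted), and all function names are assumptions for illustration. The sketch shows only the reuse, not the anchoring effect.

```python
import math


def project(vec, matrix):
    # Linear projection: each row of the matrix dotted with the vector
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def attend(query, keys, values):
    # Single-head scaled dot-product attention over the given keys/values
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


def decode(tokens, w_k, w_v, use_cache):
    """Process tokens one at a time; return (outputs, number of K/V projections)."""
    cached_keys, cached_values = [], []
    projections = 0
    outputs = []
    for t, token in enumerate(tokens):
        if use_cache:
            # Only the newest token is projected; earlier keys/values are reused.
            cached_keys.append(project(token, w_k))
            cached_values.append(project(token, w_v))
            keys, values = cached_keys, cached_values
            projections += 1
        else:
            # Without a cache, every earlier token is re-projected at every step.
            keys = [project(x, w_k) for x in tokens[: t + 1]]
            values = [project(x, w_v) for x in tokens[: t + 1]]
            projections += t + 1
        outputs.append(attend(token, keys, values))  # query = raw token (W_Q omitted)
    return outputs, projections


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
w_k = [[1.0, 0.5], [0.0, 1.0]]  # hypothetical key projection
w_v = [[0.5, 0.0], [1.0, 1.0]]  # hypothetical value projection

cached_out, cached_cost = decode(tokens, w_k, w_v, use_cache=True)
naive_out, naive_cost = decode(tokens, w_k, w_v, use_cache=False)
print(cached_cost, naive_cost)  # 4 10, i.e. n versus n(n+1)/2 projections
print(cached_out == naive_out)  # True: same result, less work
```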

But there’s a structural blind spot:

The same mechanism that optimizes efficiency anchors the model in its initial trajectory.

When the same agent successively plays writer then critic, it remains trapped in its own path.

A truly external critic, with no access to the generation context, begins from a genuinely different starting point.
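The difference between self-critique and an external critic is largely a question of what context the critic receives. Here is a minimal sketch, assuming the common chat convention of role/content dictionaries (not any specific vendor’s API) and hypothetical function names. The self-critic inherits the whole generation history. The external critic sees only the artifact under review.

```python
from typing import Dict, List

Message = Dict[str, str]


def self_critique_context(history: List[Message], draft: str) -> List[Message]:
    # Same agent: the critique request is appended to the full generation history,
    # so the critic is conditioned on every step that produced the draft.
    return history + [
        {"role": "assistant", "content": draft},
        {"role": "user", "content": "Now critique your answer above."},
    ]


def external_critique_context(draft: str) -> List[Message]:
    # External critic: a fresh context containing only the artifact under review.
    return [
        {"role": "user", "content": f"Critique the following code:\n\n{draft}"},
    ]


history = [
    {"role": "user", "content": "Write a function that looks up a user by name."},
]
draft = "def find_user(conn, name): ..."

print(len(self_critique_context(history, draft)))  # 3 messages, history included
print(len(external_critique_context(draft)))       # 1 message, no history
```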

Benchmarks measure answer correctness.

They don’t measure coverage of the space of possible problems.

The Implication for Agent Design

The real question isn’t:

how many agents?

But:

how many distinct perspectives?

Homogeneous Multi-Agent

Same model, different prompts:

- value created by trajectory sampling

- not by parallelism

Heterogeneous Multi-Agent

Different models:

- different biases

- different blind spots

- different cognitive styles

Claude doesn’t miss the same things as GPT.

A well-designed multi-agent system isn’t cosmetic parallelism.

It’s a way of covering the space of perspectives.
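The coverage idea can be sketched as running several reviewers on the same input, taking the union of their findings, and recording which reviewer contributed what. In this sketch each reviewer is a plain function standing in for an LLM call. Whether the reviewers wrap the same model with different prompts (homogeneous) or different models (heterogeneous) is left to the caller. The reviewer names and findings are made up for illustration.

```python
from typing import Callable, Dict, Set, Tuple

Reviewer = Callable[[str], Set[str]]  # hypothetical: code in, set of findings out


def multi_perspective_review(
    code: str, reviewers: Dict[str, Reviewer]
) -> Tuple[Set[str], Dict[str, Set[str]]]:
    """Run every reviewer on the same code; return the union of findings and,
    for each finding, the set of reviewers that reported it."""
    found_by: Dict[str, Set[str]] = {}
    for name, review in reviewers.items():
        for finding in review(code):
            found_by.setdefault(finding, set()).add(name)
    return set(found_by), found_by


def unique_contributions(found_by: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    # Findings reported by exactly one reviewer: coverage a single perspective would lose
    unique: Dict[str, Set[str]] = {}
    for finding, names in found_by.items():
        if len(names) == 1:
            unique.setdefault(next(iter(names)), set()).add(finding)
    return unique


# Stub reviewers standing in for differently prompted (or different) models
reviewers = {
    "general": lambda code: {"unclear variable names", "missing docstring"},
    "security": lambda code: {"SQL injection in query", "missing docstring"},
}

all_findings, found_by = multi_perspective_review("...", reviewers)
print(sorted(all_findings))
print(unique_contributions(found_by))
```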

When It Matters, When It Doesn’t

| Task Type | Nature | Optimal Strategy |
|---|---|---|
| Factual question | Convergent | Single agent |
| Deterministic calculation | Convergent | Single agent |
| Data transformation | Convergent | Single agent |
| Code review | Exploratory | Multi-perspectives |
| Risk analysis | Exploratory | Multi-perspectives |
| Security | Exploratory | Multi-perspectives |
| Creation | Exploratory | Multi-perspectives |
| Anomaly detection | Exploratory | Multi-perspectives |
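The table can be read as a routing rule. Here is a minimal sketch using the task labels from the table. The fallback for task types the table does not list is an assumption: they default to multiple perspectives, on the reasoning that a missed perspective is the failure mode at stake here.

```python
from enum import Enum


class Strategy(Enum):
    SINGLE_AGENT = "single agent"
    MULTI_PERSPECTIVE = "multi-perspectives"


# Convergent tasks from the table: one well-defined answer to reach
CONVERGENT = {"factual question", "deterministic calculation", "data transformation"}
# Exploratory tasks from the table: the risk is missing part of the problem space
EXPLORATORY = {"code review", "risk analysis", "security", "creation", "anomaly detection"}


def choose_strategy(task_type: str) -> Strategy:
    task = task_type.strip().lower()
    if task in CONVERGENT:
        return Strategy.SINGLE_AGENT
    if task in EXPLORATORY:
        return Strategy.MULTI_PERSPECTIVE
    # Assumption: unlisted task types default to several perspectives
    return Strategy.MULTI_PERSPECTIVE


print(choose_strategy("Data transformation").value)  # single agent
print(choose_strategy("Code review").value)          # multi-perspectives
```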

Prompt Engineering = Attention Routing (Conceptually)

A good prompt doesn’t just describe the task.

It guides the search.

“You are a security expert” isn’t cosmetic roleplay.

It’s a perspective instruction:

search in security reasoning patterns,

not in general patterns.

Personas, system prompts, roles, instructions:

- aren’t theatrical props;

- they orient the space being explored.
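In practice, a perspective instruction can be written as a system prompt that names the role and lists the patterns to search for. Here is a minimal sketch, assuming the common role/content message convention. The `PERSPECTIVES` table and its wording are hypothetical. The general and security focus lists reuse the quality patterns and threat patterns named earlier in this article.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Perspective:
    role: str               # who the model is asked to be
    focus: Tuple[str, ...]  # patterns it is told to search for


# Hypothetical perspective library; wording is illustrative
PERSPECTIVES: Dict[str, Perspective] = {
    "general": Perspective(
        role="an experienced code reviewer",
        focus=("readability", "structure", "naming conventions"),
    ),
    "security": Perspective(
        role="a security expert",
        focus=("input validation", "trust boundaries",
               "authentication flows", "attack surfaces"),
    ),
}


def build_review_messages(code: str, perspective: str) -> List[Dict[str, str]]:
    # The system prompt sets the angle; the user message only carries the code.
    p = PERSPECTIVES[perspective]
    checklist = "\n".join(f"- {item}" for item in p.focus)
    system = (
        f"You are {p.role}. Review the code below from this angle.\n"
        f"Look specifically for:\n{checklist}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": code},
    ]


for message in build_review_messages("def find_user(conn, name): ...", "security"):
    print(f"[{message['role']}]\n{message['content']}\n")
```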

Epistemology

| Level | Mechanism |
|---|---|
| Architecture | Multi-head attention = plurality of gazes |
| Inference | Prompt = exploration orientation |
| Agent design | Multi-agent = trajectory diversification |
| Epistemology | No global truth, only projections |

Nietzsche argued that there is no absolute truth, only perspectives.

Transformers have integrated this principle, not as a philosophical thesis, but as an engineering constraint:

multiply viewpoints rather than seeking a unique representation.

Perhaps the best agent design isn’t one that seeks the right answer,

but one that multiplies angles,

until a stable form emerges from their overlaps.

Conclusion

An LLM is not an oracle.

It’s a biased explorer.

The prompt is an exploration bias.

Multi-agent is a coverage strategy.

Truth is not a point.

It’s a silhouette that only appears when enough projections overlap.


References

- Reasoning Models Generate Societies of Thought. https://arxiv.org/abs/2601.10825

- Rethinking the Value of Multi-Agent Workflow: A Strong Single Agent Baseline (OneFlow). https://arxiv.org/abs/2601.12307

AiBrain
