Simple or complex, adapted to your needs.

Introduction

Developing a high-performance AI agent is like building a house: you can’t just stack materials randomly. Each component has its role, strengths, and limitations. After more than 20 years of software development and extensive experimentation with AI, I’ve learned that a robust agent rests on six fundamental pillars: the languages and APIs that make it work, the orchestration that coordinates its actions, the models that give it intelligence, the telemetry that lets you understand its behavior, the storage that preserves its memory, and the runtime that determines where and how it executes.

This article guides you through these six domains and the tools available in each. No marketing, just feedback from hands-on experience. Note: the list is not exhaustive.

1. Languages & API: The Foundations of Code

The choice of language and API frameworks determines development velocity, maintainability, and your agent’s performance. Here’s my toolkit.

Python {#python}

Description: Python is the reference language for AI, with the richest ecosystem of machine learning and data processing libraries.

www.python.org

Advantages:

- Unmatched ML ecosystem (PyTorch, TensorFlow, scikit-learn, Hugging Face)

- Clear and expressive syntax, ideal for rapid prototyping

- Massive community and exhaustive documentation

- Native integration with Jupyter for experimentation

- Excellent notebook management for research

When to use: For complex data pipelines, model training, analysis scripts, and when you need Python’s scientific ecosystem.

TypeScript {#typescript}

Description: TypeScript is my language of choice for production agents. It is JavaScript with static typing, which brings robustness and an excellent developer experience.

www.typescriptlang.org

Advantages:

- Static typing that eliminates an entire class of bugs

- Huge npm ecosystem for any integration

- Excellent performance with Node.js for APIs

- Native async/await and concurrency support, perfect for agents

- Code shared between frontend and backend

- Exceptional development tooling (VSCode, ESLint, Prettier)

FastAPI {#fastapi}

Description: FastAPI is a modern Python framework for creating ultra-fast RESTful APIs with automatic validation and interactive documentation.

fastapi.tiangolo.com

Advantages:

- Performance close to Node.js thanks to Starlette and Pydantic

- Automatic data validation via Python type hints

- Auto-generated OpenAPI documentation (Swagger UI)

- Native async/await support for high concurrency

- Strong typing with excellent developer experience

- Simple deployment and easy horizontal scaling

Fastify {#fastify}

Description: Fastify is an ultra-performant Node.js web framework, focused on speed and low resource costs.

www.fastify.io

Advantages:

- Among the fastest Node.js frameworks in published benchmarks

- Architecture based on modular plugins

- Built-in JSON schema validation (Ajv)

- Low memory footprint

- Built-in structured logging

- TypeScript first-class citizen

Webhooks {#webhooks}

Description: Event-driven architecture for communicating between systems asynchronously and in a decoupled manner.

en.wikipedia.org/wiki/Webhook

Advantages:

- Asynchronous communication without constant polling

- Strong decoupling between systems

- Natural scalability (push vs pull)

- De facto standard for SaaS integrations

- Facilitates event-driven architecture

- Simplified debugging with tools like ngrok or webhook.site
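Because a webhook endpoint is a public URL, receivers usually check that a payload really comes from the expected sender before acting on it. Here is a minimal sketch, assuming the sender signs the raw body with a shared secret using HMAC-SHA256 and transmits the hex digest alongside the request. The secret, the event shape, and the function names are hypothetical; each real provider documents its own signing scheme.

```python
import hashlib
import hmac
import json

def sign(secret: bytes, body: bytes) -> str:
    """Hex HMAC-SHA256 of the raw request body (what the sender would compute)."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()

def handle_webhook(secret: bytes, body: bytes, signature: str) -> dict:
    """Verify the signature, then parse the event. Raises ValueError on mismatch."""
    expected = sign(secret, body)
    # compare_digest avoids leaking information through timing differences
    if not hmac.compare_digest(expected, signature):
        raise ValueError("invalid webhook signature")
    return json.loads(body)

# Hypothetical secret and event, for illustration only
SECRET = b"shared-secret"
payload = json.dumps({"event": "ticket.created", "id": 42}).encode()
event = handle_webhook(SECRET, payload, sign(SECRET, payload))
print(event["event"])
```

Verifying against the raw bytes, before any JSON parsing, matters: re-serializing the payload can change whitespace or key order and invalidate the signature.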

MCP (Model Context Protocol) / Tools {#mcp-model-context-protocol}

Description: MCP (Model Context Protocol) is an emerging open protocol, introduced by Anthropic, that standardizes how LLMs interact with external tools.

modelcontextprotocol.io

Advantages:

- Open standard for tool integration with LLMs

- Decoupling between business logic and model invocation

- Standardized context and memory management

- Facilitates creation of modular agents

- Interoperability between different models and providers
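To make the decoupling concrete, here is a simplified sketch of the message shapes involved. MCP exchanges JSON-RPC 2.0 messages, and a server exposes its tools through methods such as `tools/list` and `tools/call`. The code below is not the official SDK: the `TOOLS` registry and the `handle` function are hypothetical, and a real server also handles initialization, transports, and richer error reporting.

```python
import json
from typing import Any, Callable

# Hypothetical registry: name -> (description, JSON Schema for arguments, handler)
TOOLS: dict[str, tuple[str, dict, Callable[..., Any]]] = {
    "add": (
        "Add two integers",
        {"type": "object",
         "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
         "required": ["a", "b"]},
        lambda a, b: a + b,
    ),
}

def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for the two tool methods sketched here."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [{"name": n, "description": d, "inputSchema": s}
                            for n, (d, s, _) in TOOLS.items()]}
    elif method == "tools/call":
        _, _, fn = TOOLS[params["name"]]
        output = fn(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": json.dumps(output)}]}
    else:
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}

call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
response = handle(call)
print(response["result"]["content"][0]["text"])
```

The model only ever sees tool names, descriptions, and schemas; the business logic behind each handler can change without touching the prompt or the model provider.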

2. Orchestration: The Conductor of Your Agents

Orchestration determines how your agents coordinate their actions, manage complex workflows, and maintain coherence. It’s the brain of your multi-agent system.

N8N {#n8n}

Description: N8N is a no-code/low-code automation platform for creating visual workflows connecting hundreds of services.

n8n.io

Advantages:

- Intuitive visual interface for building workflows

- 400+ native integrations (APIs, databases, cloud services)

- Self-hosted: total control of your data

- Conditional logic and loops for complex workflows

- Custom JavaScript execution for maximum flexibility

- Native incoming/outgoing webhooks

- Error handling and automatic retry

LangChain {#langchain}

Description: LangChain is a Python/TypeScript framework for developing LLM-driven applications with prompt chains and agents.

www.langchain.com

Advantages:

- Powerful abstractions for prompt chains

- Integration with dozens of LLMs (OpenAI, Anthropic, Cohere, etc.)

- Library of tools and loaders (documents, web, APIs)

- Support for conversational memory

- Autonomous agents with ReAct and other patterns

- Large community and numerous examples

LangGraph {#langgraph}

Description: LangGraph is a LangChain extension for creating agents with workflows as directed graphs, offering fine control over execution flows.

langchain-ai.github.io/langgraph

Advantages:

- Visual modeling of agent workflows as graphs

- Complex state management between nodes

- Loops, conditionals, and multiple branches

- Easier debugging thanks to explicit structure

- Workflow persistence and resumption

- Perfect for coordinated multi-agent systems
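The graph idea is easy to see in plain Python. The sketch below does not use the LangGraph API; it only illustrates the underlying model of nodes that transform a shared state and edges (including a conditional one) that pick the next node. All names (`draft`, `review`, `run`) are hypothetical.

```python
from typing import Callable, Dict

State = Dict[str, object]

def draft(state: State) -> State:
    # Each node returns the updated state; here we just count attempts
    attempts = int(state.get("attempts", 0)) + 1
    return {**state, "attempts": attempts, "text": f"draft v{attempts}"}

def review(state: State) -> State:
    # Stand-in for an LLM judgment: approve from the second attempt onward
    return {**state, "approved": state["attempts"] >= 2}

def route_after_review(state: State) -> str:
    # Conditional edge: loop back to "draft" until the review approves
    return "END" if state["approved"] else "draft"

NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}
EDGES: Dict[str, Callable[[State], str]] = {
    "draft": lambda s: "review",
    "review": route_after_review,
}

def run(entry: str, state: State, max_steps: int = 10) -> State:
    node = entry
    for _ in range(max_steps):  # guard against infinite loops
        state = NODES[node](state)
        node = EDGES[node](state)
        if node == "END":
            return state
    raise RuntimeError("max_steps exceeded")

final = run("draft", {})
print(final)
```

Because the structure is explicit, the state after every node can be logged or persisted, which is what makes debugging and resumption tractable.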

CrewAI {#crewai}

Description: CrewAI is a Python framework for creating collaborative AI agent teams with defined roles, objectives, and processes.

www.crewai.com

Advantages:

- “Team” paradigm: each agent has a specific role

- Automatic orchestration of collaboration between agents

- Task delegation and agent hierarchy

- Configurable sequential or parallel processes

- Shared memory between agents

- Native integration with LangChain

Pydantic AI {#pydantic-ai}

Description: Pydantic AI is a lightweight framework that uses Pydantic for data validation and structuring LLM outputs.

ai.pydantic.dev

Advantages:

- Strict validation of inputs/outputs via Pydantic schemas

- Strong typing to reduce agent errors

- Automatic JSON ↔ Python object conversion

- Natural integration with FastAPI

- Structured prompt generation

- Lightweight with no heavy dependencies
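The core idea, validating a model's reply before the rest of the program trusts it, can be sketched with the standard library alone. This is not Pydantic AI code: it uses a plain dataclass and hand-written checks as a stand-in, and the `Invoice` type and `parse_invoice` function are hypothetical.

```python
import json
from dataclasses import dataclass

@dataclass
class Invoice:
    vendor: str
    total: float

def parse_invoice(raw: str) -> Invoice:
    """Parse a model's JSON reply and reject anything that is not a valid Invoice."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("vendor"), str):
        raise ValueError("vendor must be a string")
    total = data.get("total")
    # bool is a subclass of int in Python, so exclude it explicitly
    if isinstance(total, bool) or not isinstance(total, (int, float)):
        raise ValueError("total must be a number")
    return Invoice(vendor=data["vendor"], total=float(total))

good = parse_invoice('{"vendor": "ACME", "total": 129.5}')
print(good)

try:
    parse_invoice('{"vendor": "ACME", "total": "a lot"}')
except ValueError as err:
    # A caller could send this message back to the model and ask for a retry
    print("rejected:", err)
```

A schema library replaces the hand-written checks with declarations, but the contract is the same: typed data in, or an error specific enough to feed back to the model.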

Custom Orchestration {#sur-mesure-custom-orchestration}

Description: Development of a custom orchestration system, tailored exactly to your specific needs. This is the approach of my Poulpikan framework.

Advantages:

- Total control over orchestration logic

- Zero unnecessary abstraction: optimal performance

- Architecture adapted to your business domain

- No dependency on third-party frameworks

- Free evolution according to your needs

- Minimal learning curve for your team

- Custom debugging and observability
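To give a sense of scale, a custom orchestrator can start very small. The sketch below is not the Poulpikan framework, only a hypothetical illustration: a sequential pipeline where each step is a plain function and a failing step is retried with exponential backoff.

```python
import time
from typing import Callable, List

Step = Callable[[str], str]

def run_pipeline(steps: List[Step], data: str, retries: int = 2, delay: float = 0.0) -> str:
    """Run steps in order, retrying a failing step up to `retries` extra times."""
    for step in steps:
        for attempt in range(retries + 1):
            try:
                data = step(data)
                break
            except Exception:
                if attempt == retries:
                    raise  # out of attempts: let the caller see the error
                time.sleep(delay * (2 ** attempt))  # exponential backoff
    return data

calls = {"n": 0}

def flaky_summarize(text: str) -> str:
    # Hypothetical stand-in for a model call that fails once, then succeeds
    calls["n"] += 1
    if calls["n"] == 1:
        raise TimeoutError("simulated timeout")
    return text[:20]

result = run_pipeline(
    [str.strip, flaky_summarize, str.upper],
    "  the quick brown fox jumps over the lazy dog  ",
)
print(result)
```

Everything here is ordinary code, so adding logging, timeouts, or a domain-specific routing rule means editing a function rather than learning a framework extension point.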

3. Models: The Intelligence of Your Agents

The model you choose determines your agent’s cognitive capabilities. Each model has its strengths: some excel at reasoning, others at speed or visual understanding.

LLM (Large Language Models) {#llm-large-language-models}

Description: Generative language models capable of understanding and generating human text. The foundation of any conversational agent.

Advantages:

- Deep contextual understanding of natural language

- Coherent and creative text generation

- Reasoning and problem solving

- Few-shot learning: adapts to a task from a few examples in the prompt

- Multilingual without specific training
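Few-shot prompting needs no training step: the examples simply travel with the request. A minimal sketch, assuming the common chat format of role/content messages; the helper name and the sample texts are hypothetical.

```python
from typing import Dict, List, Tuple

def few_shot_messages(
    instruction: str, examples: List[Tuple[str, str]], query: str
) -> List[Dict[str, str]]:
    """Build a chat-style message list: instruction, worked examples, then the real input."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, expected in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": expected})
    messages.append({"role": "user", "content": query})
    return messages

messages = few_shot_messages(
    "Classify the sentiment as positive or negative.",
    [("I love this product", "positive"), ("Terrible support", "negative")],
    "The update broke everything",
)
print(len(messages))
```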

Vision {#vision}

Description: Models capable of analyzing and understanding images, describing them, or extracting structured information from them.

Advantages:

- Image analysis without specific training

- Object detection, text (OCR), scene detection

- Detailed description generation

- Image classification

- Data extraction from visual documents (invoices, diagrams)

Embedding {#embedding}

Description: Models that transform text into numerical vectors capturing semantic meaning, essential for search and similarity comparison.

Advantages:

- Semantic search (beyond keywords)

- Document clustering and classification

- Similarity and duplicate detection

- Foundation of RAG (Retrieval-Augmented Generation)

- High performance for low cost

- Deterministic in practice: the same text yields the same embedding for a given model version
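Semantic search boils down to comparing vectors, most often with cosine similarity. The sketch below uses toy 3-dimensional vectors invented for illustration; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math
from typing import Dict, List

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

# Toy vectors, invented for illustration only
DOCS: Dict[str, List[float]] = {
    "reset your password": [0.9, 0.1, 0.0],
    "update billing details": [0.1, 0.9, 0.1],
    "recover account access": [0.8, 0.2, 0.1],
}

def search(query_vec: List[float], k: int = 2) -> List[str]:
    """Return the k documents whose vectors are closest to the query vector."""
    ranked = sorted(DOCS, key=lambda d: cosine(query_vec, DOCS[d]), reverse=True)
    return ranked[:k]

top = search([1.0, 0.0, 0.0])
print(top)
```

Note that "recover account access" ranks high for a query close to "reset your password" even though the two share no keyword, which is the point of searching by meaning.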

Image Generation {#image-generation}

Description: Generative models capable of creating images from textual descriptions (text-to-image).

Advantages:

- Visual creation without a designer

- Rapid iteration on visual concepts

- Various artistic styles

- Variation generation from existing images

- Integration into creative workflows

Audio {#audio}

Description: Models for transcription (speech-to-text), voice synthesis (text-to-speech), and audio analysis.

Advantages:

- Accurate multilingual transcription

- Natural and expressive voice synthesis

- Automatic audio translation

- Automatic subtitling

- Voice interfaces for agents

Reranker {#reranker}

Description: Specialized models for reordering search results according to their actual relevance to a query, drastically improving RAG system quality.

Advantages:

- Superior accuracy to embeddings alone

- Significantly improves RAG results

- Understands fine semantic nuances

- Eliminates non-relevant but lexically close results

- Low cost in post-processing
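The usual arrangement is two stages: a cheap retrieval that casts a wide net, then a more precise scorer applied only to the shortlist. The sketch below shows that shape with toy scoring functions. Word overlap stands in for vector search, and `toy_score` is a hypothetical stand-in for a real reranking model.

```python
from typing import Callable, List

def retrieve(query: str, docs: List[str], k: int) -> List[str]:
    """Stage 1: cheap recall. Word overlap stands in for a vector search here."""
    q = set(query.lower().split())
    return sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)[:k]

def rerank(query: str, candidates: List[str], score: Callable[[str, str], float], k: int) -> List[str]:
    """Stage 2: score each (query, document) pair with a costlier, more precise model."""
    return sorted(candidates, key=lambda d: score(query, d), reverse=True)[:k]

def toy_score(query: str, doc: str) -> float:
    # Hypothetical stand-in for a reranker: penalize documents that negate the topic
    words = doc.lower().split()
    overlap = len(set(query.lower().split()) & set(words))
    return overlap - (2.0 if "not" in words else 0.0)

DOCS = [
    "python is not supported on this device",
    "install python on this device with the official installer",
    "billing and invoices",
]
query = "install python on device"
shortlist = retrieve(query, DOCS, k=2)
best = rerank(query, shortlist, toy_score, k=1)
print(best)
```

The first document is lexically close to the query but answers a different question; the second stage is where such near misses get pushed down.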

4. Telemetry: Observing to Understand and Improve

Without telemetry, your agent is a black box. Measuring, tracing, and analyzing are essential for debugging, optimizing, and monitoring in production.
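Whatever platform you pick, the underlying mechanism is the same: wrap each meaningful call in a span that records what ran, how long it took, and whether it failed. A minimal sketch with an in-memory list; the `traced` decorator and `TRACE` store are hypothetical, and a real setup would export spans to one of the backends below.

```python
import functools
import time
from typing import Any, Callable, Dict, List

TRACE: List[Dict[str, Any]] = []  # stand-in for a tracing backend

def traced(name: str) -> Callable:
    """Record name, duration, and outcome of each call as one span."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            span: Dict[str, Any] = {"name": name, "status": "ok"}
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                span["status"] = f"error: {type(exc).__name__}"
                raise
            finally:
                # Runs on success and on failure, so every call leaves a span
                span["duration_ms"] = (time.perf_counter() - start) * 1000
                TRACE.append(span)
        return wrapper
    return decorator

@traced("llm.generate")
def generate(prompt: str) -> str:
    # Hypothetical stand-in for a model call
    return prompt.upper()

generate("hello")
for span in TRACE:
    print(span["name"], span["status"], round(span["duration_ms"], 3))
```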

LangSmith {#langsmith}

Description: LangSmith is a development and monitoring platform for LLM applications, by the creators of LangChain.

www.langchain.com/langsmith

Advantages:

- Complete tracing of LangChain chains

- Visualization of prompts and responses at each step

- Automatic cost calculation (tokens, API calls)

- Easier debugging with session replay

- Test datasets and automated evaluation

- Production monitoring and alerts

- Human annotations for continuous improvement

Langfuse {#langfuse}

Description: Langfuse is an open-source alternative to LangSmith, offering observability and analytics for LLM applications, with self-hosted option.

langfuse.com

Advantages:

- Open-source: total control and no vendor lock-in

- Self-hosted possible: sensitive data stays with you

- Multi-turn session tracing

- Detailed performance and cost metrics

- Integration with LangChain, LlamaIndex and direct APIs

- Real-time monitoring dashboard

- Data export for custom analyses

Phoenix {#phoenix}

Description: Phoenix is an open-source ML observability platform specialized in tracing and evaluating LLM and embedding systems.

arize.com/phoenix

Advantages:

- Embedding visualization and drift detection

- Retrieval quality analysis (RAG)

- Hallucination and toxicity detection

- Notebook-friendly interface (Jupyter)

- Lightweight and quick to deploy

- Focus on qualitative evaluation

5. Storage: The Memory of Your Agents

An agent without memory cannot learn or maintain context between sessions. Storage choice impacts performance, scalability, and your agent’s capabilities.

PostgreSQL {#postgresql}

Description: PostgreSQL is a robust, proven open-source relational database, with pgvector extension for vector storage.

www.postgresql.org

Advantages:

- Unmatched reliability and maturity (30+ years)

- Full ACID: guaranteed consistency

- pgvector: embedding storage and search through an extension

- Excellent performance with appropriate indexing

- Rich tooling ecosystem (backups, monitoring, migrations)

- JSON support for flexibility

- Self-hosted or managed (AWS RDS, Supabase, etc.)

pgvector {#pgvector}

Description: pgvector is a PostgreSQL extension allowing efficient storage and search of embedding vectors directly in Postgres.

github.com/pgvector/pgvector

Advantages:

- Everything in one database: vectors + metadata

- Fast similarity search (HNSW, IVFFlat)

- Combined filtering: semantic + classic attributes

- No need for separate vector database

- ACID transactions even for vectors

- Reduced infrastructure cost

ChromaDB {#chromadb}

Description: ChromaDB is a lightweight open-source vector database, specifically designed for embeddings and similarity search.

www.trychroma.com

Advantages:

- Ultra-simple setup: `pip install chromadb`

- Embedded mode: no separate server needed

- Intuitive Python API

- Rich metadata on each vector

- Combined semantic + metadata filtering

- Lightweight in resources

- Perfect for prototyping and experimentation

Pinecone {#pinecone}

Description: Pinecone is a managed vector database, optimized for similarity search at very large scale.

www.pinecone.io

Advantages:

- Managed: zero ops, automatic scalability

- Excellent performance at very large scale (billions of vectors)

- Low latency (<100ms) even on large volumes

- Sophisticated metadata filtering

- Namespaces for data isolation

- Simple APIs and multiple SDKs

- Built-in monitoring and analytics

Supabase {#supabase}

Description: Supabase is an open-source alternative to Firebase, based on PostgreSQL, offering database, auth, storage and auto-generated APIs.

supabase.com

Advantages:

- PostgreSQL + pgvector: relational power + vectors

- Integrated authentication and authorization

- Auto-generated REST and GraphQL APIs

- S3-compatible file storage

- Realtime subscriptions (WebSocket)

- Complete admin dashboard

- Self-hosted or managed

- Free up to substantial volumes

SQLite {#sqlite}

Description: SQLite is an embedded, lightweight, serverless SQL database, stored in a simple file.

www.sqlite.org

Advantages:

- Ultra-lightweight: single library, single file

- Zero configuration: no server to manage

- Excellent read performance

- Full ACID transactions

- Portable: copy the file = migrate the database

- Perfect for edge computing and local applications

- Vector support via extensions (sqlite-vss)
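Since SQLite ships with Python's standard library, a persistent conversation memory takes only a few lines. A minimal sketch; the table layout and the `remember`/`recall` helpers are hypothetical choices, not a prescribed schema.

```python
import sqlite3

# ":memory:" keeps the sketch self-contained; a file path would persist between sessions
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE messages ("
    " id INTEGER PRIMARY KEY AUTOINCREMENT,"
    " session_id TEXT NOT NULL,"
    " role TEXT NOT NULL,"
    " content TEXT NOT NULL)"
)

def remember(session_id: str, role: str, content: str) -> None:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO messages (session_id, role, content) VALUES (?, ?, ?)",
            (session_id, role, content),
        )

def recall(session_id: str, limit: int = 10) -> list:
    """Return the most recent messages of a session, oldest first."""
    rows = conn.execute(
        "SELECT role, content FROM messages WHERE session_id = ? ORDER BY id DESC LIMIT ?",
        (session_id, limit),
    ).fetchall()
    return rows[::-1]

remember("s1", "user", "My name is Ada.")
remember("s1", "assistant", "Nice to meet you, Ada.")
remember("s2", "user", "Unrelated session.")
history = recall("s1")
print(history)
```

The `limit` parameter doubles as a crude context-window budget: only the most recent turns are loaded back into the prompt.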

6. Runtime & Hosting: Where the Agent’s Heart Beats

The choice of inference infrastructure is ultimately a trade-off between latency, cost, and sovereignty. It’s often overlooked during design, yet it determines the final user experience and your monthly bill.

OpenRouter {#openrouter}

Description: OpenRouter is a unified API giving access to almost all models on the market (OpenAI, Anthropic, but also Groq, Together, and dozens of others).

openrouter.ai

Advantages:

- Single API for 150+ models

- Single account, single billing

- Switch models by changing a parameter

- Automatic failover between providers

- Real-time price comparison

- Perfect for multi-model experimentation

- Avoids total vendor lock-in
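Switching models really is a one-parameter change when every provider sits behind the same chat completions format. The sketch below builds such requests without sending them, so it runs offline. The model identifiers and the API key placeholder are illustrative; check the provider's catalog for current names.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"

def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a chat completion request for the given model."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

# Illustrative model identifiers: only this string changes between providers
for model in ("anthropic/claude-sonnet-4.5", "meta-llama/llama-3.1-70b-instruct"):
    req = build_request(model, "Summarize this ticket.", api_key="YOUR_API_KEY")
    print(req.get_method(), json.loads(req.data)["model"])
    # urllib.request.urlopen(req) would send it; omitted so the sketch runs offline
```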

Local + Open Source Hosting {#local-open-source-hosting}

For those who want to build on open-source models, these platforms run them for you as hosted inference. Because the weights are open, you keep the option of moving the same models onto your own infrastructure later.

Together AI {#together-ai}

Description: Together AI is a cloud inference platform specialized in open-source models, with an excellent performance/price ratio.

www.together.ai

Advantages:

- Large catalog of open-source models (Llama 3, Qwen, Mistral, etc.)

- Infrastructure optimized for production

- Automatic scaling and high reliability

- Attractive prices, often 2-5x cheaper than OpenAI

- Fine-tuning facilitated on their clusters

- Support for niche models (code, multimodal, etc.)

Fireworks AI {#fireworks-ai}

Description: Fireworks AI is a fast and economical inference platform for open-source models, with focus on speed and fine-tuning.

fireworks.ai

Advantages:

- Excellent performance (200-400 tokens/s)

- Simple and fast fine-tuning

- OpenAI-compatible API: easy migration

- Very competitive prices

- Low latency and high uptime

- Good documentation and SDKs

DeepInfra {#deepinfra}

Description: DeepInfra is an economical inference service for open-source models, optimized for large volumes.

deepinfra.com

Advantages:

- Ultra-aggressive pricing: perfect for large volumes

- Large choice of open-source models

- Pay-as-you-go without commitment

- Automatic scaling

- Good for low-cost experimentation

Groq {#groq}

Description: Groq is an ultra-fast inference infrastructure based on LPUs (Language Processing Units), optimized specifically for transformers.

groq.com

Advantages:

- Unmatched throughput: 500-1000 tokens/second

- Quasi-instantaneous time-to-first-token (<50ms)

- Perfect for real-time voice interactions

- Support for Llama, Mixtral, Gemma models

- Competitive pricing despite performance

- “Magical” user experience: zero perceptible wait time
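A quick back-of-the-envelope calculation shows why these two numbers matter for voice: the time to a complete answer is roughly the time to first token plus the generation time. The fast case below uses the lower bound of the figures quoted in this list; the baseline (0.5 s to first token, 50 tokens/s) is an assumption chosen only for comparison.

```python
def response_time(ttft_s: float, tokens: int, tokens_per_s: float) -> float:
    """Total time = time to first token + time to generate the answer."""
    return ttft_s + tokens / tokens_per_s

# 300 tokens is an illustrative answer length
fast = response_time(ttft_s=0.05, tokens=300, tokens_per_s=500)
baseline = response_time(ttft_s=0.5, tokens=300, tokens_per_s=50)
print(f"fast: {fast:.2f}s, baseline: {baseline:.2f}s")
```

Under these assumptions the same answer arrives in well under a second instead of several seconds, which is the difference between a conversation and a wait.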

French Providers {#french-providers}

For projects requiring GDPR compliance, data sovereignty, and French support, these French players offer robust solutions.

Scaleway (Generative APIs) {#scaleway}

Description: Scaleway Generative APIs is a robust French offering for deploying open-source models on sovereign infrastructure with transparent pricing.

www.scaleway.com/en/ai

Advantages:

- Data hosted in Europe (Paris, Amsterdam)

- Native GDPR compliance

- Llama, Mistral, and other open-source models

- Clear and predictable pricing

- French support

- Integration with Scaleway ecosystem (compute, storage, etc.)

- Excellent for public-sector contracts and regulated sectors

OVHcloud (AI Endpoints) {#ovhcloud}

Description: OVHcloud AI Endpoints is an inference service from the European cloud leader, ideal for infrastructures already on OVH.

www.ovhcloud.com/en/public-cloud/ai-machine-learning

Advantages:

- 100% European hosting (France, Germany, UK)

- Deep integration with existing OVH services

- Competitive prices with pricing commitment

- Various open-source models

- Quality technical support

- ISO27001 certification, HDS for healthcare

Mistral AI (The Platform) {#mistral-ai}

Description: Mistral AI, the French AI flagship, offers direct access to its own models, with European hosting options.

mistral.ai

Advantages:

- Mistral Large, Medium, Small models: excellent performance

- Large context windows (32k-128k tokens)

- European hosting option

- Competitive prices vs OpenAI

- Quality French language support

- Dynamic product roadmap

Big Hyperscalers {#big-hyperscalers}

The choice for large organizations that need enterprise-grade security, deep integration, and professional SLA guarantees.

AWS Bedrock {#aws-bedrock}

Description: AWS Bedrock is a managed inference service from Amazon, with focus on security and data isolation.

aws.amazon.com/bedrock

Advantages:

- Data never leaves your AWS VPC

- Enterprise security and compliance (SOC2, HIPAA, etc.)

- Access to Claude (Anthropic), Llama, Titan, Jurassic

- Native integration with AWS services (Lambda, S3, etc.)

- No data sharing with model providers

- Built-in guardrails and content filtering

- AWS enterprise support

Google Vertex AI {#vertex-ai}

Description: Google Vertex AI is a complete ML/AI platform from Google, giving access to the Gemini family and advanced ML tools.

cloud.google.com/vertex-ai

Advantages:

- Access to Gemini (1M+ token context)

- Gigantic context windows: perfect for analyzing entire documents

- Deep integration with GCP (BigQuery, Cloud Storage, etc.)

- Complete MLOps tools (training, tuning, deployment)

- Native multimodality (text, image, video, audio)

- Transparent pricing with possible commitment

Azure OpenAI {#azure-openai}

Description: Azure OpenAI is an OpenAI service hosted on Azure, with Microsoft enterprise guarantees.

azure.microsoft.com/en-us/products/ai-services/openai-service

Advantages:

- GPT-4, GPT-4o with enterprise SLA

- Native integration with Microsoft 365, Teams, etc.

- Data isolated in your Azure tenant

- Enterprise compliance (ISO, SOC2, HIPAA)

- Microsoft enterprise-level support

- Perfect for Microsoft-centric organizations

- Fine-tuning on your private data

Cloudflare Workers AI {#cloudflare-workers-ai}

Description: Cloudflare Workers AI is an inference service on Cloudflare’s edge, executing models closest to users.

developers.cloudflare.com/workers-ai

Advantages:

- Execution on the “Edge” (Cloudflare CDN network)

- Minimal network latency: under 50 ms for roughly 95% of users

- Small Language Models optimized for edge

- Attractive serverless pricing

- No cold start

- Integration with Workers (serverless functions)

- Global by default: deployed on 300+ data centers

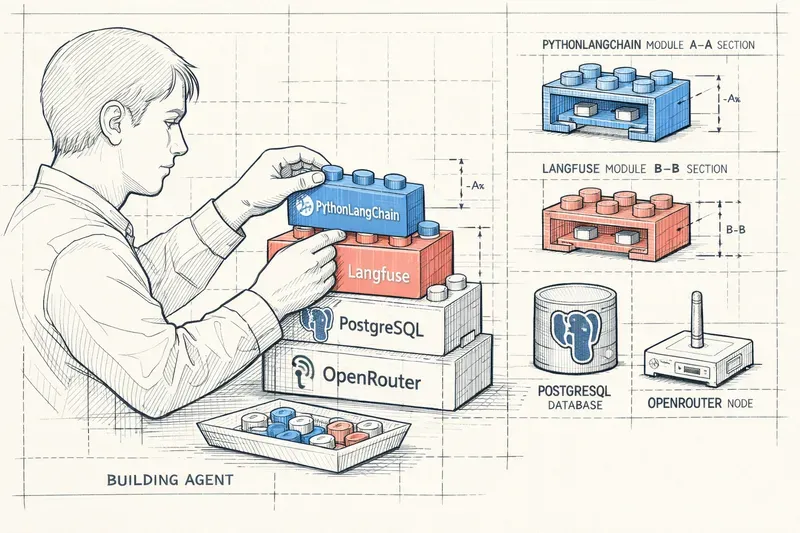

Conclusion: Choose, Assemble, Iterate

Building an agent isn’t about choosing the most hyped technologies, it’s about assembling the right building blocks to solve your specific problem. Each project has its constraints: budget, expected scalability, team skills, data criticality, required development speed.

My advice after hundreds of hours developing agents: start simple. TypeScript + Claude Sonnet 4.5 + PostgreSQL + Langfuse + Groq (or OpenRouter for flexibility) covers 80% of use cases. Then, add complexity only when the need is proven.

Runtime choice is often underestimated: Groq will transform your voice agent’s user experience, Mistral AI + Scaleway will secure your European compliance, AWS Bedrock will reassure your CISO. It’s not a technical detail, it’s a product decision.

And above all, don’t be afraid to write custom code. Frameworks are tools, not dogmas. Sometimes, 200 well-thought-out lines of TypeScript beat 2000 lines of poorly understood abstractions.

At HeyIntent, this philosophy guides my projects: understand the business need, choose the right building blocks, build robustly, measure, iterate. No magic, just engineering.

Need a custom agent for your project? I’m available for technical collaborations. Contact me to discuss.

Further Reading

- AI Agent Design Guide: What Works, What Fails — The golden rule of deterministic feedback and 7 design principles

- Meta-Analysis: Capabilities, Limitations and Patterns of AI Agents — Systematic analysis of 100+ publications with pattern verdicts

- The 11 Multi-Agent Orchestration Patterns — Pipeline, Supervisor, Council, Swarm: which pattern to choose?

- Agent Skills: The Onboarding Manual — Structuring instructions for specialized agents

AiBrain