Executive Summary

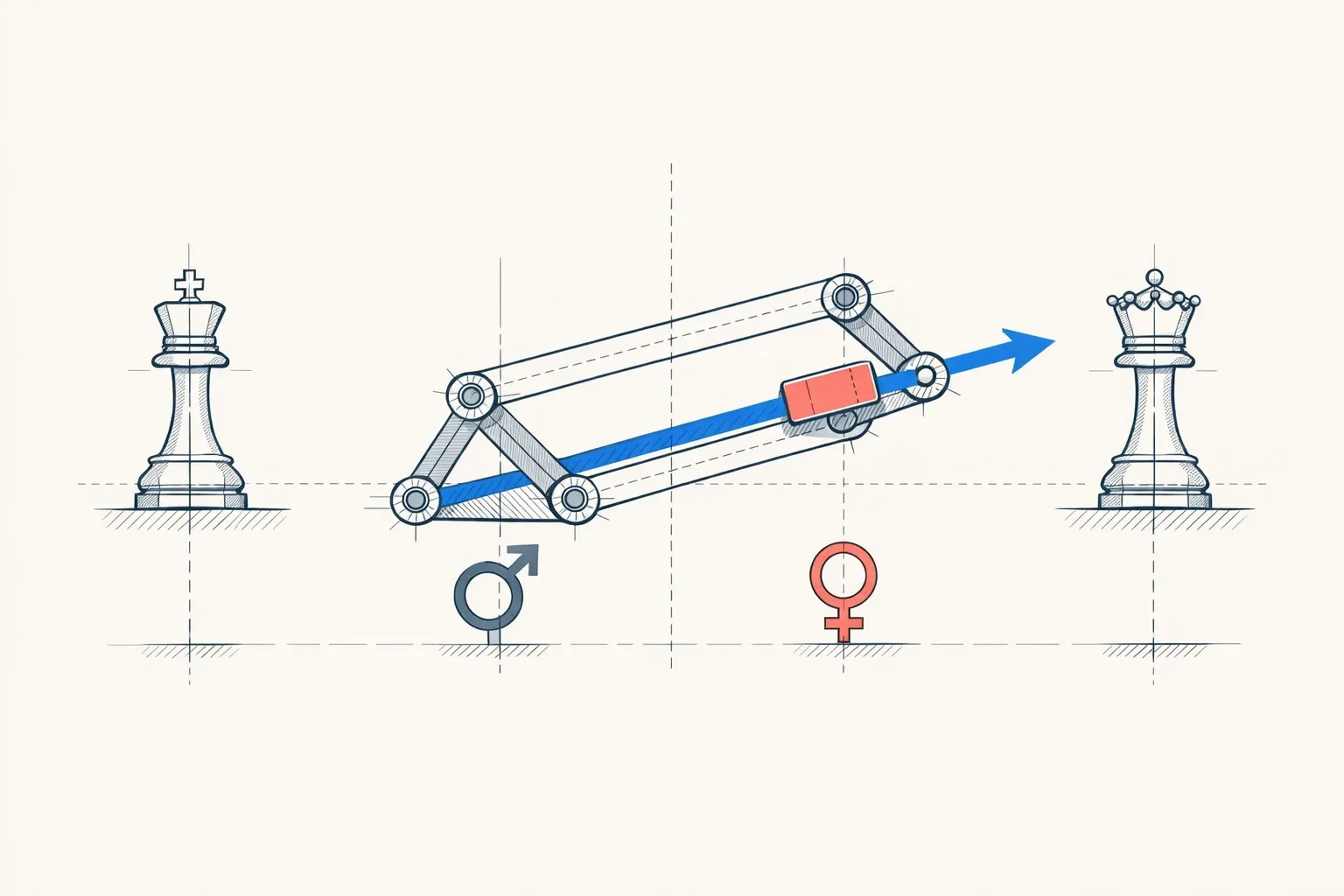

This article demystifies the famous analogy “king - man + woman = queen” by explaining how AI models, through embeddings, represent words as points in a multidimensional vector space. Semantic traits (royalty, gender, etc.) form axes, enabling arithmetic operations that capture linguistic relationships.

Key Points:

- Words → numerical vectors (static embeddings like Word2Vec, contextual in Transformers).

- Vector arithmetic: subtracting/adding modifies traits (e.g., changing gender).

- The vectors are learned automatically from the contexts in which words appear in text.

- Limitations: pedagogical simplifications, potential biases.

Ideal for understanding the basics of computational semantics without advanced math.

Glossary: Understanding Technical Terms

- Embedding: Representation of a word (or phrase) as a multidimensional numerical vector capturing its semantic traits. Close vectors correspond to similar meanings.

- Word2Vec: Learning algorithm that generates static embeddings by analyzing word contexts in large text corpora.

- Transformer: Neural network architecture (basis of GPT, BERT) using an attention mechanism to produce contextual embeddings that adapt to the sentence context.

- Semantic vector: List of numbers (e.g., [0.9, -0.2, 0.7]) defining a word’s position in the space of meanings.

- Vector analogy: Operation like king - man + woman that navigates semantic space to find an analogous word (queen).

- Attention mechanism: Component of transformers that weights the importance of neighboring words to dynamically adjust embeddings.

You may have already seen this strange equation: “king - man + woman = queen”. How can you subtract one word from another? How can artificial intelligence solve math problems… with vocabulary? It’s as if words had a hidden mathematical existence. And that’s exactly the case.

Words as Points on a Map

Imagine you need to place every word of a language on a giant map. Not at random: similar words must be close together. “Cat” near “dog”, “king” near “queen”, “Paris” near “France”.

To achieve this, you decide to use feature axes. Like on a geographical map with latitude and longitude, each word will have coordinates. But instead of “north-south” and “east-west”, your axes represent meaning traits:

- A “royalty” axis (from “beggar” to “emperor”)

- A “gender” axis (from “masculine” to “feminine”)

- An “age” axis (from “baby” to “centenarian”)

- And hundreds of others…

The word “king” would have coordinates like:

- Royalty: 9/10 (very royal)

- Gender: 2/10 (masculine)

- Age: 7/10 (rather old)

The word “queen” would be almost in the same place, but with a change:

- Royalty: 9/10 (still very royal)

- Gender: 8/10 (feminine)

- Age: 7/10 (similar approximate age)

This is what we call an embedding: transforming a word into a list of numbers that capture its meaning.
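As a minimal sketch, the toy coordinates above can be written down as plain Python lists. The axis order and the helper names `describe` and `differing_axes` are assumptions made for this illustration; real embeddings are learned rather than written by hand.

```python
# Toy embeddings using the illustrative scores from the text (out of 10).
# Axis order is an assumption for this sketch: (royalty, gender, age).
AXES = ("royalty", "gender", "age")

embeddings = {
    "king": [9, 2, 7],
    "queen": [9, 8, 7],
}

def describe(word):
    """Return the word's coordinates as an axis-name -> score mapping."""
    return dict(zip(AXES, embeddings[word]))

def differing_axes(a, b):
    """List the axes on which two words have different scores."""
    pairs = zip(AXES, embeddings[a], embeddings[b])
    return [axis for axis, x, y in pairs if x != y]

print(describe("king"))
print(differing_axes("king", "queen"))  # only the gender axis differs
```

In this toy setup, “king” and “queen” sit at the same spot on every axis except gender.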

The Problem This Solves

For decades, computers treated words as unrelated labels. To a machine, “king” and “queen” had nothing in common. Neither did “cat” and “dog”.

For an AI to understand language, it must grasp that:

- “King” and “monarch” are close

- “King” and “queen” share royalty but differ by gender

- “King” and “carrot” have nothing to do with each other

Embeddings solve this problem by giving geometry to language. Similar words become close points in a mathematical space. And this proximity enables calculations.
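To make “proximity enables calculations” concrete, here is a small sketch that measures distances between toy points. The coordinates for “monarch” and “carrot” are invented for this example, and the Euclidean distance is used only because it matches the map metaphor; real systems more often compare vectors with cosine similarity.

```python
import math

# Hypothetical toy coordinates on the axes (royalty, gender, age), out of 10.
# "king" and "queen" follow the text; "monarch" and "carrot" are invented.
vectors = {
    "king": (9, 2, 7),
    "queen": (9, 8, 7),
    "monarch": (9, 5, 7),
    "carrot": (0, 5, 1),
}

def distance(a, b):
    """Euclidean distance between the coordinates of two words."""
    return math.dist(vectors[a], vectors[b])

def nearest(word):
    """Return the other word whose coordinates are closest to `word`."""
    others = [w for w in vectors if w != word]
    return min(others, key=lambda w: distance(word, w))

for other in ("monarch", "queen", "carrot"):
    print(f"king -> {other}: {distance('king', other):.2f}")
print("nearest to king:", nearest("king"))
```

With these made-up numbers, “monarch” is the closest neighbor of “king”, “queen” is a little further away, and “carrot” is far from all of them.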

Word Arithmetic Explained

Let’s return to our equation: king - man + woman = queen.

Imagine three simplified axes:

- Royalty axis (horizontal)

- Gender axis (vertical)

- Age axis (depth, which we’ll ignore to simplify)

| Step | Word | Royalty | Gender | Notes |
|---|---|---|---|---|
| 1 | king | 9/10 | 2/10 | Very royal, masculine |
| 2a | man (to subtract) | 5/10 | 2/10 | Neutral royalty (could be anyone), masculine |
| 2b | After subtraction | 4 | 0 | 9 - 5 = 4; 2 - 2 = 0 (gender removed) |
| 3a | woman (to add) | 5/10 | 8/10 | Neutral royalty, feminine |
| 3b | Final result | 9 | 8 | 4 + 5 = 9; 0 + 8 = 8 |

We removed the “masculine” trait along with the baseline royalty score of an ordinary person, then added that baseline back together with the “feminine” trait.

These are exactly the coordinates of queen!
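The same calculation can be sketched in a few lines of Python. The two-axis tuples below reuse the toy scores from the table; the helper names `sub` and `add` are assumptions for this illustration.

```python
# Toy 2-D coordinates (royalty, gender) taken from the table above.
king = (9, 2)
man = (5, 2)
woman = (5, 8)
queen = (9, 8)

def sub(a, b):
    """Subtract two vectors coordinate by coordinate."""
    return tuple(x - y for x, y in zip(a, b))

def add(a, b):
    """Add two vectors coordinate by coordinate."""
    return tuple(x + y for x, y in zip(a, b))

after_subtraction = sub(king, man)      # (4, 0)
result = add(after_subtraction, woman)  # (9, 8)

print(after_subtraction, result, result == queen)
```

The match is exact here only because the toy scores were chosen that way. With learned embeddings, the result lands near “queen” rather than exactly on it.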

Why It Works: Directions Have Meaning

The magic is that differences between words capture pure relationships.

“Woman” - “man” creates a vector (an arrow) representing the change from masculine to feminine gender. In a well-trained embedding, this arrow has roughly the same direction and length as “queen” - “king”, “aunt” - “uncle”, or “actress” - “actor”.

It’s as if language had universal directions:

- The “masculine → feminine” direction

- The “singular → plural” direction

- The “present → past” direction

By navigating according to these directions, we can explore relationships between words mathematically.
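One way to check that two arrows point the same way is to compare their directions with cosine similarity. The sketch below does this on invented toy coordinates (all values, including those for “uncle” and “aunt”, are assumptions for illustration). On real embeddings the arrows are only approximately parallel, so the similarity would be high but below 1.

```python
import math

# Hypothetical toy coordinates on the axes (royalty, gender, age).
words = {
    "man": (5, 2, 5), "woman": (5, 8, 5),
    "king": (9, 2, 7), "queen": (9, 8, 7),
    "uncle": (5, 2, 6), "aunt": (5, 8, 6),
}

def offset(src, dst):
    """Vector (arrow) pointing from `src` to `dst`."""
    return tuple(d - s for s, d in zip(words[src], words[dst]))

def cosine(u, v):
    """Cosine similarity: 1.0 means the two arrows point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

gender_direction = offset("man", "woman")
for src, dst in [("king", "queen"), ("uncle", "aunt")]:
    print(src, "->", dst, cosine(gender_direction, offset(src, dst)))
```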

The Running Example: Paris and Capitals

Let’s take another case: “Paris - France + Italy = ?”

| Step | Word | Capital | Frenchness | Size | Notes |
|---|---|---|---|---|---|
| 1 | Paris | 10/10 | 9/10 | 8/10 | Starting point |
| 2a | France (to subtract) | 5/10 | 10/10 | 9/10 | A country, not a city; large |
| 2b | After subtraction | 5 | -1 | -1 | 10 - 5; 9 - 10; 8 - 9 |
| 3a | Italy (to add) | 5/10 | 1/10 | 8/10 | Not French |
| 3b | Final result | 10 | 0 | 7 | 5 + 5; -1 + 1; -1 + 8 |

We extracted “the essence of capital” by removing the French context, then added the Italian context.

The word closest to these coordinates? Rome.
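Finding “the word closest to these coordinates” is a nearest-neighbor search. Here is a minimal sketch: Paris, France and Italy use the scores from the table, while the candidate cities and their coordinates are invented for the example.

```python
import math

# Axes: (capital, Frenchness, size), toy scores out of 10.
paris, france, italy = (10, 9, 8), (5, 10, 9), (5, 1, 8)

# Hypothetical candidate words with made-up coordinates.
candidates = {
    "Rome": (10, 1, 7),
    "Milan": (3, 1, 6),
    "Lyon": (3, 9, 5),
}

# Paris - France + Italy, computed coordinate by coordinate.
target = tuple(p - f + i for p, f, i in zip(paris, france, italy))

# Pick the candidate whose coordinates are closest to the target point.
closest = min(candidates, key=lambda name: math.dist(candidates[name], target))

print(target, closest)
```

Real analogy searches work the same way over the whole vocabulary, usually leaving out the input words themselves (here Paris, France and Italy are simply not among the candidates).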

How AI Learns These Coordinates

You might wonder: who decides that “king” is worth 9/10 in royalty? No one.

AI learns these coordinates automatically by reading billions of sentences. It uses a simple principle: words that appear in similar contexts have similar meanings.

If AI reads:

- “The king wears a crown”

- “The queen wears a crown”

- “The monarch wears a crown”

It deduces that “king”, “queen”, and “monarch” must be close in coordinate space, because they share the same neighbors (“wears”, “crown”).

Algorithms like word2vec adjust the coordinates of millions of words so that this rule holds as well as possible across the whole corpus. After hours or days of computation, words have found their natural place on the map.
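The “shared neighbors” idea can be illustrated by simply counting context words. This is not word2vec itself, only a sketch of the principle it relies on; the fourth sentence about a carrot and the window size of 3 are assumptions added for contrast.

```python
from collections import Counter

sentences = [
    "the king wears a crown",
    "the queen wears a crown",
    "the monarch wears a crown",
    "the carrot grows in soil",  # invented sentence, for contrast
]

def context_counts(target, window=3):
    """Count words appearing within `window` positions of `target`."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.split()
        for i, token in enumerate(tokens):
            if token == target:
                lo, hi = max(0, i - window), i + window + 1
                counts.update(
                    t for j, t in enumerate(tokens[lo:hi], start=lo) if j != i
                )
    return counts

def shared_context(a, b):
    """Context words that both `a` and `b` have been seen with."""
    return set(context_counts(a)) & set(context_counts(b))

print(shared_context("king", "queen"))
print(shared_context("king", "carrot"))
```

“King” and “queen” share almost all of their neighbors, while “king” and “carrot” share only the article “the”. An embedding algorithm turns this kind of overlap into nearby coordinates.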

The Difference with Modern Transformers

So far, we’ve talked about static embeddings: “king” always has the same coordinates.

But modern systems like ChatGPT use transformers, where coordinates change according to context.

Take the word “bank”:

- “I deposited money at the bank” → coordinates close to “finance”

- “I sat on the bank of the river” → coordinates close to “shore”

In a transformer, “bank” doesn’t have a fixed position. Its coordinates are recalculated for each sentence, depending on neighboring words. The attention mechanism (another fascinating subject) enables these dynamic adjustments.

The arithmetic “king - man + woman” can still work, but it becomes more subtle: the coordinates of “king” now depend on the sentence in which it appears.
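To give a feel for coordinates that move with context, here is a heavily simplified stand-in for attention: each word's vector is replaced by a softmax-weighted average of the vectors in its sentence. The two axes (finance, nature), all the numbers, and the function name `contextual` are assumptions for this sketch. A real transformer uses learned query, key and value projections across many layers, which are left out here.

```python
import math

# Hypothetical static vectors on two invented axes: (finance, nature).
static = {
    "bank": (0.5, 0.5),       # ambiguous on its own
    "money": (1.0, 0.0),
    "deposited": (0.9, 0.1),
    "river": (0.0, 1.0),
    "sat": (0.3, 0.3),
}

def contextual(word, sentence):
    """Mix the sentence's vectors, weighted by softmax of their dot product with `word`."""
    query = static[word]
    scores = [sum(q * k for q, k in zip(query, static[t])) for t in sentence]
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return tuple(
        sum(w * static[t][d] for w, t in zip(weights, sentence))
        for d in range(len(query))
    )

# Only the content words of each example sentence are kept.
finance_ctx = contextual("bank", ["deposited", "money", "bank"])
river_ctx = contextual("bank", ["sat", "bank", "river"])

print(finance_ctx)
print(river_ctx)
```

In the first sentence, “bank” drifts toward the finance axis; in the second, toward the nature axis. The same word ends up with two different sets of coordinates.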

Journey Recap

You’ve just understood how AI transforms words into mathematics:

- Each word becomes a point in a space with hundreds of dimensions

- Each dimension captures a meaning trait (royalty, gender, etc.)

- Similar words are close points

- Subtracting or adding words modifies these coordinates

- Relationships between words become geometric directions

“King - man + woman = queen” isn’t magic: it’s navigation in the space of meaning.

Pedagogical Simplifications

To make this concept accessible, I’ve made several intentional simplifications:

What has been simplified:

- The number of dimensions: I talked about 3-4 axes (royalty, gender, age) when real embeddings typically have hundreds to thousands of dimensions. Since it is impossible to visualize 768 dimensions, we reduce them to what our brain can imagine.

- Dimension interpretability: I named the axes (“royalty”, “gender”). In reality, dimensions are learned automatically and don’t have a clear name. Dimension 247 doesn’t obviously mean “royalty”. Some dimensions capture fuzzy combinations of several traits.

- Calculation precision: I used scores out of 10 to simplify. Real embeddings are floating-point numbers, often small in magnitude and not confined to a fixed scale.

- Word2vec complexity: I said “AI reads sentences and learns”. In reality, word2vec trains a shallow neural network to predict neighboring words, using mathematical tools such as softmax and gradient descent.

- Transformers: I mentioned that embeddings become contextual, but I didn’t explain the attention mechanism that enables this. That’s another entire article.
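The word2vec point above can be made slightly more concrete. In its skip-gram variant, the training data consists of (center word, neighboring word) pairs taken from a sliding window. The sketch below only generates those pairs; the function name `skipgram_pairs` and the window size are assumptions, and the actual training step (softmax, gradient descent) is not shown.

```python
def skipgram_pairs(tokens, window=2):
    """Build (center, context) pairs for every word within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:  # a word is not its own neighbor
                pairs.append((center, tokens[j]))
    return pairs

pairs = skipgram_pairs("the king wears a crown".split(), window=1)
for center, context in pairs:
    print(center, "->", context)
```

Each pair is one small prediction exercise for the network: given the center word, guess the neighbor.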

Why these simplifications are OK:

- The intuition remains accurate: Words really are points in a multidimensional space, and the arithmetic really does work for many relationships.

- The principles are true: Dimensions capture semantic traits, even if it’s less clear than “royalty” or “gender”.

- Progression is respected: Understanding static embeddings is a prerequisite for understanding transformers.

What remains rigorously exact:

- Embeddings transform words into numerical vectors

- Similar words have close vectors (closeness is usually measured with cosine similarity, sometimes with Euclidean distance)

- Vector arithmetic captures semantic relationships

- “King - man + woman” really gives a vector close to “queen” in word2vec (when the input words themselves are excluded from the search)

- Transformers make these embeddings contextual

If you remember that words have geometry and that relationships between words are directions, you’ve understood the essentials. The rest are technical details to refine this intuition.

Going Further

Now that you’ve grasped the principle, questions open up:

- Do all analogies work? No. “King - man + woman” is a famous example because it works well, but many analogies fail. Why are some relationships captured and others not?

- How can we visualize 768 dimensions? Techniques like t-SNE or UMAP project embeddings into 2D so we can see them, but information is lost along the way. What do we keep, and what do we lose?

- Biases in embeddings: If AI learns from human texts, it also learns our prejudices. “Doctor - man + woman” sometimes gives “nurse” instead of “doctor”. How can these biases be reduced?

- Contextual vs static embeddings: In a transformer, how exactly does context modify coordinates? This is the role of the attention mechanism, a fascinating subject to explore next.

- Beyond words: We can create embeddings for sentences, paragraphs, images, even chemical molecules. What changes when encoding more complex objects?

You now have the foundations to explore these territories. Word arithmetic is only the beginning of a world where meaning becomes calculable.

Web Resources

- Illustrated Word2Vec by Jay Alammar: exceptional interactive visualizations on word2vec and embeddings. https://jalammar.github.io/illustrated-word2vec/

- Word Embedding Demo by Carnegie Mellon University: interactive tutorial to experiment with word analogies. https://www.cs.cmu.edu/~dst/WordEmbeddingDemo/tutorial.html

AiBrain
